A recent Human Rights Watch report revealed that over 170 images and personal details of children from Brazil were scraped without their knowledge or consent. These images have been used to train AI models, raising serious concerns about the violation of children’s privacy rights. The dataset containing the images, LAION-5B, has been a popular source of training data for AI startups, which shows how widespread such practices are in the tech industry.

The images of children in the LAION-5B dataset were scraped from a wide range of content, including mommy blogs, personal blogs, maternity blogs, parenting blogs, and even stills from YouTube videos. They were posted with an expectation of privacy, which makes their unauthorized use particularly invasive. The fact that most of these images could not be found through a reverse image search further underscores the extent of the privacy violation.

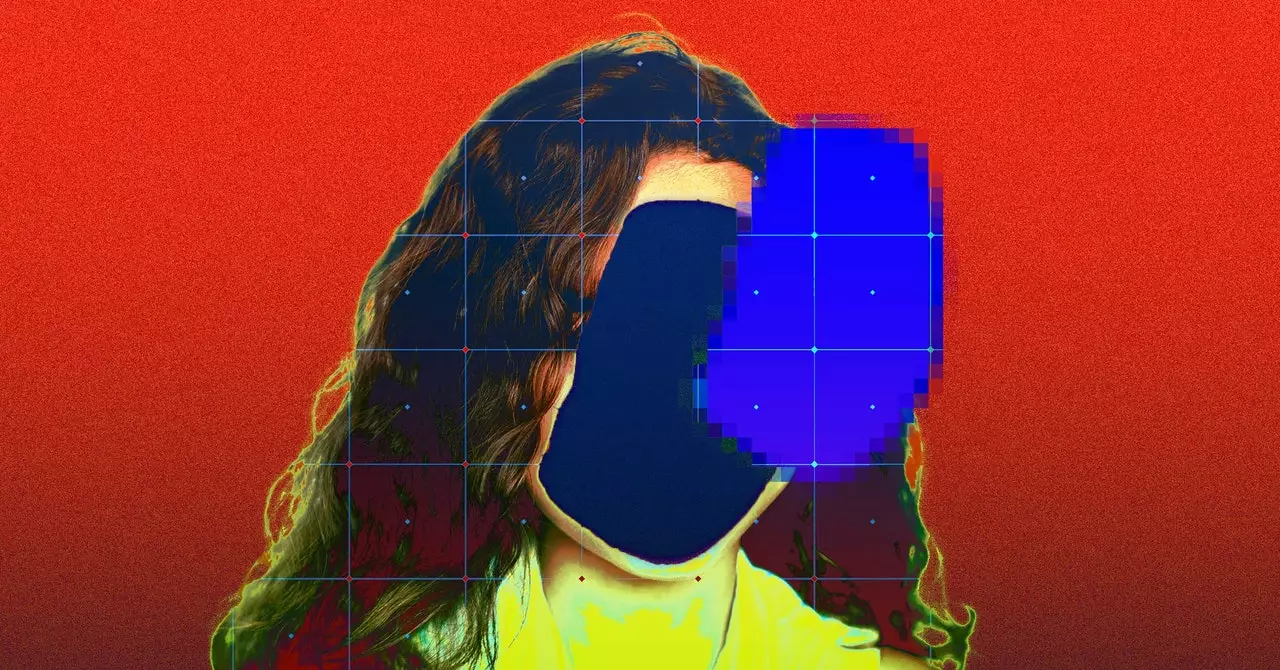

The implications of using children’s images to train AI models are far-reaching and troubling. According to Hye Jung Han, a children’s rights and technology researcher at Human Rights Watch, the AI tools trained on such datasets can create realistic imagery of children, putting them at risk of manipulation by malicious actors. This raises concerns not only about the creation of explicit deepfakes but also about the potential exposure of sensitive information such as locations or medical data stored in the images.

In response to the Stanford report that identified links to illegal content in the LAION-5B dataset, the organization responsible for the dataset, LAION, has taken steps to address the issue. LAION-5B has been taken down, and the organization is working with various entities, including the Internet Watch Foundation, the Canadian Centre for Child Protection, Stanford, and Human Rights Watch, to remove all references to illegal content. However, the fact that these images were included in the dataset in the first place raises questions about the effectiveness of the initial screening process.

The unauthorized scraping of content from YouTube, one of the sources of the children’s images, is a clear violation of the platform’s terms of service. YouTube has reiterated its stance on this issue, stating that it will continue to take action against such abuses. Using children’s images without consent to train AI models therefore breaches both ethical standards and the terms of service of major platforms like YouTube.

Beyond the immediate implications of using children’s images in AI training datasets, there are broader concerns about the potential misuse of such technology. The rise of explicit deepfakes, especially in school settings where they are used for bullying, highlights the urgent need to address these issues. The case of a US-based artist who found an image from her private medical records in the LAION dataset underscores the need for greater accountability and transparency in the use of personal data in AI development.

The violation of children’s privacy in AI training datasets is a serious issue that requires immediate attention and action. The nonconsensual use of children’s images for training AI models not only compromises their privacy rights but also poses significant risks of manipulation and exploitation. Organizations and regulators must enforce stricter guidelines and regulations to prevent such practices and safeguard the privacy and rights of children in the digital age.
