Cognitive flexibility, the ability to switch rapidly between different thoughts and mental concepts, is a crucial human capability. It enables multitasking, quick skill acquisition, and adaptation to new situations. Although artificial intelligence (AI) systems have advanced significantly in recent years, they still fall short of human flexibility when it comes to learning new skills and switching between tasks.

Recent research has focused on understanding how biological neural circuits support cognitive flexibility, particularly in the context of multitasking. Computer scientists and neuroscientists have started studying neural computations using artificial neural networks, aiming to develop AI systems that can tackle multiple tasks efficiently.

In 2019, a research group from New York University, Columbia University, and Stanford University trained a neural network to perform 20 related tasks. This raised the question of what allows such a network to perform modular computations that let it handle many different tasks effectively.

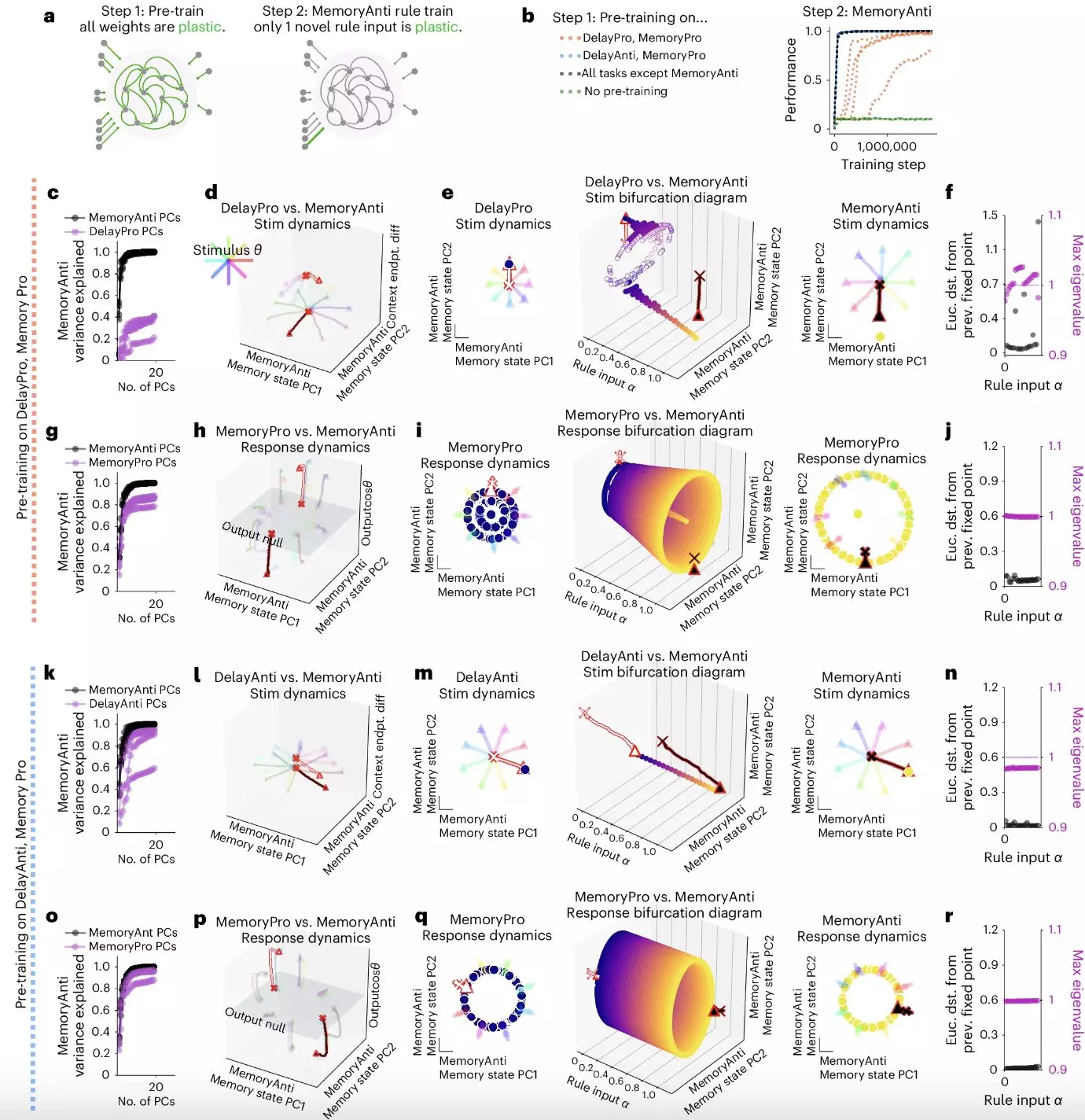

The recent study by Laura N. Driscoll, Krishna Shenoy, and David Sussillo focused on exploring the mechanisms underlying the computations of recurrently connected artificial neural networks. The researchers identified a computational substrate in these networks that enables modular computations, which they referred to as “dynamical motifs.”

The researchers describe dynamical motifs as recurring patterns of neural activity that implement specific computations through dynamics, such as attractors, decision boundaries, and rotations. The same motifs were reused across tasks, which helps explain how a single network can flexibly handle many of them.
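To make these terms concrete, here is a minimal sketch of three motif types in hand-written toy dynamical systems. These equations are illustrative assumptions, not the study's networks: in the research, such motifs emerge in trained recurrent networks rather than being specified by hand. The bistable system `dx/dt = x - x**3` has two attractors (x = -1 and x = +1) separated by an unstable point at x = 0 that acts as a decision boundary, and the 2D linear system rotates its state at angular speed `omega`.

```python
import math


def simulate_bistable(x0, dt=0.01, steps=2000):
    """Euler-integrate dx/dt = x - x**3 (a hypothetical toy system).

    The attractors at x = -1 and x = +1 are separated by an unstable fixed
    point at x = 0, which acts as a decision boundary: the sign of the
    starting state decides which attractor the trajectory settles into.
    """
    x = x0
    for _ in range(steps):
        x += dt * (x - x**3)
    return x


def rotate(state, omega, t):
    """Exact solution of dx/dt = -omega*y, dy/dt = omega*x.

    The state rotates by angle omega*t while its distance from the origin
    is preserved, a toy version of a rotational motif.
    """
    x, y = state
    c, s = math.cos(omega * t), math.sin(omega * t)
    return (c * x - s * y, s * x + c * y)


if __name__ == "__main__":
    print(simulate_bistable(0.1))   # settles near +1
    print(simulate_bistable(-0.1))  # settles near -1
    print(rotate((1.0, 0.0), math.pi / 2, 1.0))  # quarter turn, close to (0, 1)
```

The small initial offsets show the decision-boundary role of x = 0: states starting on either side are pulled to different attractors.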

The study also showed that lesioning specific clusters of units in the recurrent networks impaired performance in a modular way, disrupting particular computations rather than the network as a whole. In addition, the researchers found that after an initial phase of learning, motifs were reconfigured to support fast transfer learning, underscoring the adaptability of these networks.
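A lesion experiment of this kind can be sketched with a toy vanilla recurrent network: a "lesioned" unit is simply clamped to zero activity at every time step, and the resulting trajectories are compared with those of the intact network. The network size, random weights, `tanh` activation, and the helper names `make_rnn` and `run_rnn` are all hypothetical choices for illustration; they are not the architecture or clustering procedure used in the study.

```python
import math
import random


def make_rnn(n_units, n_inputs, seed=0):
    """Build random weights for a toy vanilla RNN (hypothetical sizes/scaling)."""
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(n_units)
    W = [[rng.gauss(0.0, scale) for _ in range(n_units)] for _ in range(n_units)]
    U = [[rng.gauss(0.0, 1.0) for _ in range(n_inputs)] for _ in range(n_units)]
    return W, U


def run_rnn(W, U, inputs, lesioned=()):
    """Run h_{t+1} = tanh(W h_t + U x_t), clamping lesioned units to zero.

    Returns the list of hidden-state vectors, one per input time step.
    """
    n = len(W)
    dead = set(lesioned)
    h = [0.0] * n
    trajectory = []
    for x in inputs:
        pre = [
            sum(W[i][j] * h[j] for j in range(n))
            + sum(U[i][k] * x[k] for k in range(len(x)))
            for i in range(n)
        ]
        # Lesioned units contribute nothing to the next step's recurrent input.
        h = [0.0 if i in dead else math.tanh(p) for i, p in enumerate(pre)]
        trajectory.append(h)
    return trajectory


if __name__ == "__main__":
    W, U = make_rnn(n_units=8, n_inputs=2)
    # A brief input pulse followed by a silent period.
    inputs = [[1.0, 0.0]] * 5 + [[0.0, 0.0]] * 5
    intact = run_rnn(W, U, inputs)
    damaged = run_rnn(W, U, inputs, lesioned={0, 1, 2})
    gap = max(abs(a - b) for ha, hb in zip(intact, damaged) for a, b in zip(ha, hb))
    print(f"largest change in activity after lesioning units 0-2: {gap:.3f}")
```

In an analysis like the one described, the interesting question is which task behaviors degrade after a given cluster is removed; this sketch only shows the mechanics of silencing units.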

The findings have significant implications for both neuroscience and computer science. Understanding the computations that underpin cognitive flexibility in artificial neural networks could inform new strategies for building AI systems that handle multiple tasks more efficiently.

Overall, the study by Driscoll, Shenoy, and Sussillo highlights the importance of cognitive flexibility and modular computation in neural networks. By identifying dynamical motifs as a fundamental unit of compositional computation, the researchers have opened up new avenues for research in the fields of neuroscience and artificial intelligence.
