Artificial intelligence (AI) has transformed many fields, especially through large language models (LLMs) such as GPT-4. These models have been built into everyday technology and are used for text generation, coding, chatbots, and translation. Underpinning this capability is a straightforward principle: the models predict the next word in a sequence from the words that precede it. However, a recent study reveals a consistent asymmetry. LLMs are slightly but reliably better at predicting the next word than at inferring the previous one, a phenomenon dubbed the “Arrow of Time” effect.

Researchers Clément Hongler and Jérémie Wenger set out to investigate this peculiar behavior of language models. Their starting question was whether these models could construct narratives backward, beginning from an ending and working toward a beginning. Together with machine learning expert Vassilis Papadopoulos, they ran experiments on several architectures, including Generative Pre-trained Transformers (GPT), Gated Recurrent Units (GRU), and Long Short-Term Memory (LSTM) networks. The results were consistent: across these models, backward word prediction was reliably worse than forward prediction.
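To make "backward prediction" concrete: one simple way to obtain a backward model is to train the same kind of model on text with its word order reversed, so that it predicts each word from the one that follows it, and then compare its average loss with that of the forward model. The sketch below illustrates that idea under stated assumptions. It uses a hypothetical add-one-smoothed bigram model in place of the GPT, GRU, and LSTM networks in the study, and the function names and toy corpus are invented for this example. It is not the researchers' method or setup.

```python
import math
from collections import Counter


def bigram_cross_entropy(train_tokens, test_tokens):
    """Average bits per predicted token for an add-one-smoothed bigram model.

    The model is fitted on `train_tokens` and scored on `test_tokens`
    (which must contain at least two tokens). Each token is predicted
    from the single token immediately before it.
    """
    vocab_size = len(set(train_tokens) | set(test_tokens))
    pair_counts = Counter(zip(train_tokens, train_tokens[1:]))
    context_counts = Counter(train_tokens[:-1])
    total_bits = 0.0
    for prev, nxt in zip(test_tokens, test_tokens[1:]):
        # Laplace (add-one) smoothing gives unseen pairs non-zero probability.
        p = (pair_counts[(prev, nxt)] + 1) / (context_counts[prev] + vocab_size)
        total_bits -= math.log2(p)
    return total_bits / (len(test_tokens) - 1)


def forward_and_backward_loss(train_text, test_text):
    """Return (forward, backward) bits per word for whitespace-tokenized text."""
    train, test = train_text.split(), test_text.split()
    forward = bigram_cross_entropy(train, test)
    # "Backward" model: same model class, fitted and scored on reversed word
    # order, so each word is predicted from the word that follows it.
    backward = bigram_cross_entropy(train[::-1], test[::-1])
    return forward, backward


train_text = "the cat sat on the mat and the dog sat on the rug"
test_text = "the dog sat on the mat"
fwd_bits, bwd_bits = forward_and_backward_loss(train_text, test_text)
print(f"forward: {fwd_bits:.3f} bits/word, backward: {bwd_bits:.3f} bits/word")
```

On a palindromic corpus the two numbers coincide exactly, because reversal leaves the data unchanged. On ordinary text they generally differ, but with a toy model and corpus like this one the difference says nothing about the effect the study measured.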

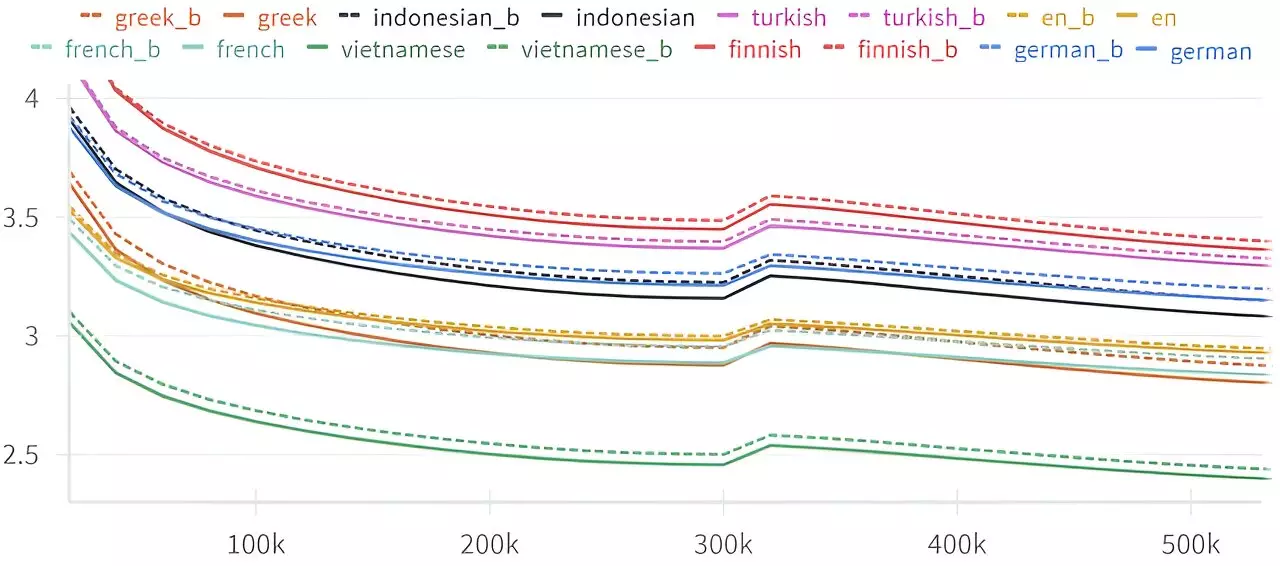

Hongler summarized the findings: “While large language models can predict both next and previous words, they do so with slightly diminished accuracy when working backward.” This consistent underperformance in reverse prediction, observed across multiple languages and model architectures, hints at an inherent structural asymmetry in language. It echoes an earlier observation by Claude Shannon, the founder of information theory, who also compared how difficult it is to predict the letters of a text forward and backward.

The implications of this “Arrow of Time” effect extend beyond linguistic curiosity. Hongler’s findings suggest that LLMs are sensitive to the direction of time in text, revealing a fundamental aspect of language structure that has only become apparent with today’s large models. In the researchers’ view, language may be more than a medium for communication and may also reflect properties related to intelligence, causality, and physics.

The researchers further propose that this property could be used to identify intelligent behavior in machines. If large language models can reveal deeper connections between language processing and intelligence, those insights could inform the design of more sophisticated AI systems. The work could also contribute to philosophical and scientific inquiries into the nature of time, prompting further exploration of how time manifests itself both in language and in physical reality.

The study also aligns with Shannon’s earlier work. Shannon noted that, in theory, predicting letters forward and backward should be equally difficult, yet human subjects found backward prediction harder. The theoretical symmetry follows from the chain rule of probability: the probability of a whole sequence can be factored into step-by-step predictions in either order, so an ideal predictor would accumulate the same total uncertainty in both directions. Although the gap was small, it raises significant questions about the cognitive mechanisms behind our understanding of language, and it suggests that human intuitions about language processing are shaped by more complex underlying principles.
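The theoretical symmetry Shannon pointed to can be checked numerically. Below is a minimal sketch, assuming a small made-up joint distribution over three-token sequences (the distribution and the function names are illustrative, not taken from the study). It sums the per-position conditional entropies once revealing positions left to right and once right to left. By the chain rule, both sums should equal the joint entropy, here 1.5 bits, up to floating-point rounding.

```python
import math
from collections import defaultdict


def entropy(joint):
    """Shannon entropy (bits) of a distribution given as {outcome: probability}."""
    return -sum(p * math.log2(p) for p in joint.values() if p > 0)


def conditional_entropy_sum(joint, order):
    """Sum of H(X_pos | positions revealed earlier), revealing positions in `order`.

    `joint` maps equal-length tuples (whole sequences) to probabilities summing
    to 1. By the chain rule this equals entropy(joint) for any order of positions.
    """
    total = 0.0
    for k, pos in enumerate(order):
        seen = order[:k]
        context_mass = defaultdict(float)   # p(values at seen positions)
        extended_mass = defaultdict(float)  # p(values at seen positions, value at pos)
        for seq, p in joint.items():
            context = tuple(seq[j] for j in seen)
            context_mass[context] += p
            extended_mass[context + (seq[pos],)] += p
        # H(X_pos | X_seen) = -sum over (context, x) of p(context, x) * log2 p(x | context)
        for key, p in extended_mass.items():
            if p > 0:
                total -= p * math.log2(p / context_mass[key[:-1]])
    return total


# Illustrative (made-up) distribution over three-token sequences.
joint = {("a", "b", "c"): 0.5, ("a", "c", "c"): 0.25, ("b", "b", "a"): 0.25}
positions = list(range(3))

forward_total = conditional_entropy_sum(joint, positions)         # left to right
backward_total = conditional_entropy_sum(joint, positions[::-1])  # right to left
print(entropy(joint), forward_total, backward_total)
```

Because this calculation uses the exact distribution, no gap can appear. The asymmetry reported for LLMs concerns models that learn these conditional predictions from data, which this toy check does not model.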

The researchers argue that the gap between forward and backward prediction might reflect deeper characteristics of how narratives are constructed and understood. As Hongler puts it, “The capacity of language models to process narratives may underscore an intricate relationship between language, causality, and the intrinsic qualities of intelligence.” This perspective invites a more nuanced understanding of both language models and our relationship with time itself.

The research reportedly began with a collaboration with a theater school, where the aim was to develop an AI chatbot for improvisational storytelling. The challenge was to craft narratives that led to a predetermined conclusion, a task that required the chatbot to work in reverse, starting from the intended ending. When the researchers noticed that the models were weaker at backward prediction, that observation led to the broader finding about the structure of language itself.

Hongler’s excitement about the project stems not only from bringing AI into creative work but also from the surprises uncovered along the way. The interplay between language, technology, and time could lead to new applications in both creative fields and academic research, and could deepen our understanding of cognition and communication.

The study by Hongler, Wenger, and Papadopoulos sheds light on a fascinating aspect of language modeling: the asymmetry between forward and backward prediction in LLMs is more than a technical detail and may offer deeper insight into language, intelligence, and time. As researchers continue to unravel these questions, the work could open the way to significant advances in both AI technology and philosophical thinking about existence and knowledge.
