Despite the vast strides made in artificial intelligence (AI), robotics remains markedly underwhelming in practical applications. Machines have become increasingly sophisticated at processing information and performing specialized tasks, yet many robots in industrial settings still operate within narrowly defined parameters. They perform repetitive, pre-programmed actions and exhibit minimal awareness of their environment. This raises the question: why have these highly touted advances in AI not carried over into robotics?

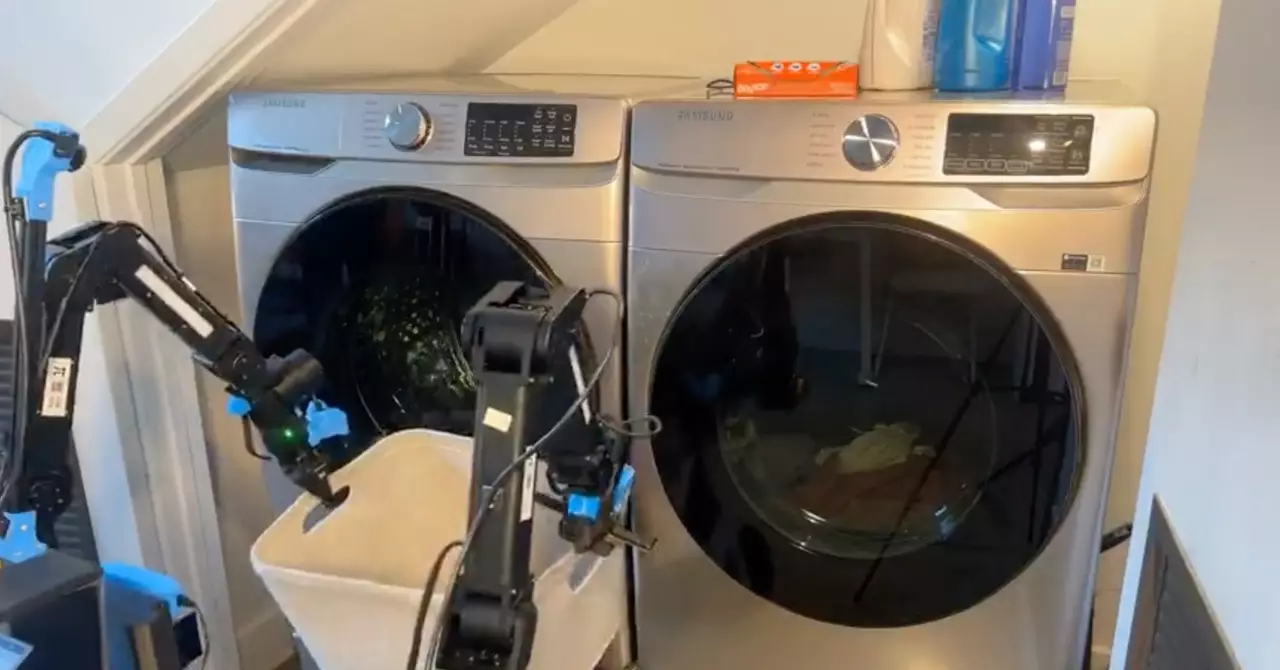

The majority of robots deployed in factories and warehouses lack the flexibility needed to adapt to their surroundings. While some machines do have basic perceptual abilities, such as recognizing and grasping objects, their functional capacities are still severely limited. These robots exhibit a deficit in what is known as general physical intelligence, which hampers their dexterity and proficiency. In an industry that thrives on efficiency and versatility, this limitation presents a significant barrier that prevents robots from fulfilling a broader spectrum of tasks.

There is an emerging consensus that for robots to become truly useful, especially in human environments like homes, they must develop more generalized capabilities. Optimism about AI advancements has seeped into the public psyche, spurred on by ambitious projects like Tesla’s humanoid robot, Optimus. Elon Musk’s vision suggests that this robot could become commercially available by 2040, at a price of approximately $20,000 to $25,000. While these predictions are thrilling, they invite skepticism about whether a robot can effectively manage the complexities inherent in everyday tasks.

Traditional approaches to robotics often involved training one machine to execute one task. This was the norm because of the belief that learned skills could not easily be transferred between different machines or tasks. However, recent academic studies have begun to challenge this notion. Initiatives like Google’s Open X-Embodiment project, which shared insights and learning capabilities between 22 different robots across 21 research facilities, indicate that it may be possible to cultivate more adaptable robots.

One of the prominent hurdles in developing sophisticated robots is the lack of extensive datasets needed for training. Unlike large language models, which benefit from a copious amount of textual data, robots often work with far less information, which limits how much they can learn. To counteract this, efforts are underway to synthesize training data and to develop techniques that can learn more from smaller datasets. The startup Physical Intelligence, for instance, has adopted a mixed approach that combines vision-language models with diffusion modeling strategies from AI-driven image generation to foster more comprehensive learning pathways.

The road to enhanced robotic capabilities is fraught with challenges, but innovation continues to pave the way for future advancements. As researchers around the world work to widen the range of what robots can achieve, one thing becomes clear: the scaffolding of progress is in place, but much work remains to be done. The promise of intelligent, adaptable robots is tempting, yet realizing it will require not just technological advances but also an understanding of how to bridge the considerable gaps in learning and adaptability. The vision that robots could proficiently handle any task put before them is ambitious, but with significant effort, it may become a reality.
