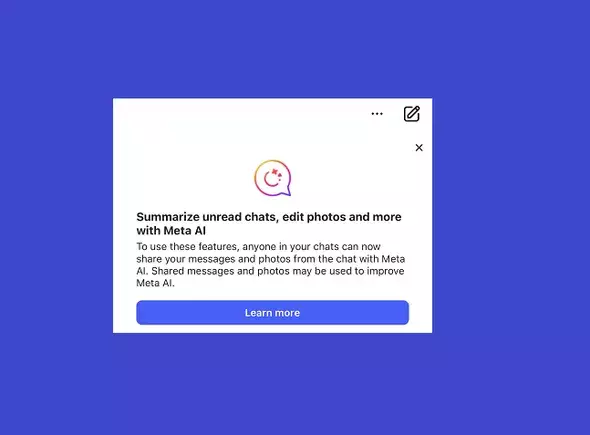

In a somewhat alarming development, Meta has begun processing direct messages (DMs) through its new AI tools, raising privacy concerns among users. A notification now appearing in Meta’s apps announces AI chat features that let users ask questions directly within conversations on Facebook, Instagram, Messenger, and WhatsApp. The feature may improve the user experience by offering instant assistance, but it also carries significant privacy implications that users should understand.

Users invoke the feature by mentioning “@MetaAI” in a chat, and in doing so they may unwittingly open their conversations to Meta’s AI. This raises a troubling question: do users fully understand what this integration means? The notification makes one point explicit: if any participant in a chat uses Meta AI, the messages shared with it, which could include sensitive data such as passwords or financial details, may be used to train Meta’s AI. Meta says it tries to remove personal identifiers before using such content to improve its AI systems. But can users genuinely trust that process?

The message accompanying the new feature aims for a reassuring tone, yet it exposes a fundamental flaw in Meta’s approach: it shifts the burden of awareness onto the user. It advises users not to share sensitive information in chats if they want to keep it away from the AI. The result is a paradox: users who want the convenience of AI assistance must simultaneously navigate a minefield of privacy concerns.

Critics argue that the benefits of having Meta AI available in chats do not justify the risks to private messages. The value of answering a quick question in-line is outweighed by the anxiety it creates about data security. Users can already use Meta AI separately, in a standalone chat, which keeps other participants’ messages out of the exchange and avoids unintended exposure. That raises another question: why does Meta embed its AI directly in private conversations, where the privacy stakes are much higher, rather than steering users toward the safer standalone option?

Moreover, many users may not realize that, simply by using Meta’s platforms, they have already agreed to let the company use their information as described in lengthy terms of service that few people read closely. In other words, this AI integration did not appear overnight; it rests on permissions users granted when they signed up. Anyone objecting to this development should recognize that escaping this web of consent is practically impossible short of abandoning Meta’s services altogether.

Some may argue that the chance of the AI reproducing someone’s personal data in its output is low, but even the possibility is a significant privacy concern. The prospect of private conversation snippets being used to train AI is unsettling, particularly when users have no clear assurances about how their information might be repurposed later. So the question remains: is this an acceptable price for the convenience of instant answers?

For users wary of having their existing DMs used by a corporation, the app suggests a few practical options: avoid using Meta AI in chats, delete sensitive conversations, or uninstall the app entirely. These measures, however, are band-aid fixes for deeper problems of user agency, consent, and data security. They call for a broader conversation about the ethical responsibility of tech giants like Meta to protect user privacy in an era of rapid AI advancement.

Ultimately, Meta’s move to bring AI into personal communications can be seen as both innovative and intrusive. It is meant to improve the user experience, but it also makes digital privacy harder to manage. Users must now approach these features with fresh caution, continually weighing the convenience of AI against what it may cost them in privacy. As the effects of such technologies unfold, open discussion of user rights and consent will be essential if technological convenience is not to come at the expense of personal security.
