In artificial intelligence, the search for better accuracy and efficiency continues to drive innovation. A familiar experience illustrates the idea: how often have we been asked a question we could only partially answer? In such situations, calling a knowledgeable friend can get us to the correct response. The same collaborative approach applies to large language models (LLMs). Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have proposed an algorithm named Co-LLM that lets different LLMs collaborate on a single answer, improving their overall effectiveness.

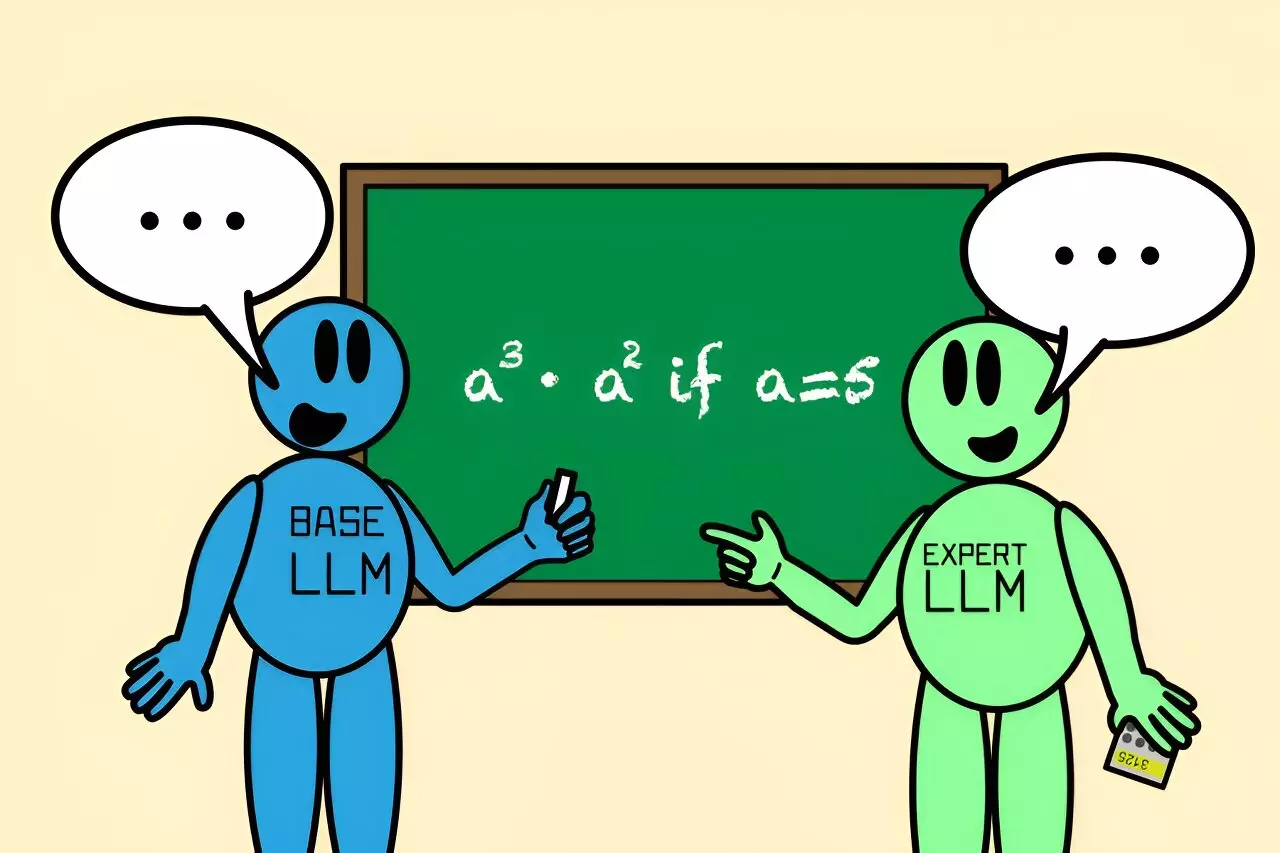

The essence of Co-LLM lies in its organic approach to LLM collaboration. Unlike methods that rely on complex algorithms or vast datasets to decide when models should cooperate, Co-LLM pairs a general-purpose base model with a more specialized counterpart. The general model drafts the answer while Co-LLM reviews each word (or token) of the response and calls on the expert model where needed, which makes the final answer more reliable. Because the expert model is consulted only for some tokens rather than at every step, response generation is also more efficient.

To decide when to hand over, Co-LLM uses a component known as the “switch variable.” This variable functions much like a project manager, determining where expertise is required. For example, when asked about extinct bear species, the general-purpose LLM can begin the answer, and the switch variable brings in tokens from the expert model where precise details matter, such as the date a species went extinct.
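The token-level handoff described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`collaborative_decode`, `base_next`, `expert_next`, `defer_prob`), the 0.5 threshold, and the scripted toy "models" are all hypothetical stand-ins, and `<DATE>` is a placeholder rather than a real fact.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-ins: a "model" maps the tokens so far to the next token,
# and the switch maps the tokens so far to a probability of deferring.
NextToken = Callable[[List[str]], str]
Switch = Callable[[List[str]], float]


def collaborative_decode(
    base_next: NextToken,
    expert_next: NextToken,
    defer_prob: Switch,
    threshold: float = 0.5,   # assumed cutoff, not taken from the source
    max_tokens: int = 20,
    eos: str = "<eos>",
) -> List[Tuple[str, str]]:
    """Generate token by token, recording which model produced each token."""
    tokens: List[str] = []
    trace: List[Tuple[str, str]] = []
    for _ in range(max_tokens):
        # The switch decides, per token, whether to call the expert.
        use_expert = defer_prob(tokens) > threshold
        token = expert_next(tokens) if use_expert else base_next(tokens)
        if token == eos:
            break
        tokens.append(token)
        trace.append((token, "expert" if use_expert else "base"))
    return trace


# Toy scripted "models": the base model is unsure at position 5.
BASE_SCRIPT = ["The", "species", "went", "extinct", "around", "<unsure>", "<eos>"]
EXPERT_SCRIPT = ["The", "species", "went", "extinct", "around", "<DATE>", "<eos>"]


def base_next(tokens: List[str]) -> str:
    return BASE_SCRIPT[len(tokens)]


def expert_next(tokens: List[str]) -> str:
    return EXPERT_SCRIPT[len(tokens)]


def defer_prob(tokens: List[str]) -> float:
    # Toy switch: high deferral probability only where the date is needed.
    return 0.9 if len(tokens) == 5 else 0.1


trace = collaborative_decode(base_next, expert_next, defer_prob)
for token, source in trace:
    print(f"{token:10s} <- {source}")
```

In this toy run the expert is called for a single token, which is the efficiency argument in miniature: the larger or more specialized model does work only where the switch asks for it.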

A noteworthy attribute of Co-LLM is that it teaches the base model about the expertise of its specialized counterpart. Using domain-specific datasets, the algorithm identifies which parts of an answer are particularly hard for the general-purpose model to generate. It then trains the base model to call the expert LLM at those points, for example when confronted with complex biomedical queries or intricate mathematical problems. This training provides the scaffolding the general model needs to perform effectively, and reflects the algorithm’s design aim of mirroring human collaboration.
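One simple way to picture how domain data can reveal where the base model struggles is to compare, token by token, how much probability each model assigns to the correct answer. The sketch below is an assumed heuristic for illustration only, not necessarily the training procedure the researchers use; `deferral_labels`, the margin of `log 2`, and the example probabilities are all hypothetical.

```python
import math
from typing import List


def deferral_labels(
    base_logprobs: List[float],
    expert_logprobs: List[float],
    margin: float = math.log(2.0),  # assumed: expert must be at least 2x more likely
) -> List[int]:
    """Return 1 where the expert scores the reference token clearly higher, else 0."""
    if len(base_logprobs) != len(expert_logprobs):
        raise ValueError("both models must score the same reference tokens")
    return [
        1 if expert - base > margin else 0
        for base, expert in zip(base_logprobs, expert_logprobs)
    ]


# Made-up probabilities each model assigns to four reference tokens.
base_scores = [math.log(p) for p in (0.90, 0.80, 0.05, 0.70)]
expert_scores = [math.log(p) for p in (0.85, 0.75, 0.60, 0.72)]

labels = deferral_labels(base_scores, expert_scores)
print(labels)
```

Labels like these could serve as a rough training signal for a switch: positions marked 1 are places where handing over to the expert would likely have helped.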

For instance, if a user inquires about the ingredients of a specific prescription drug, the general-purpose LLM might struggle to provide a correct answer. However, when paired with a specialized biomedical model, the likelihood of generating an accurate and helpful response significantly increases, highlighting the algorithm’s utility in real-world applications.

The effectiveness of Co-LLM is demonstrated through various case studies. For example, when tasked with a mathematical problem like calculating \(a^3 \cdot a^2\), where \(a = 5\), a standard LLM might incorrectly compute the answer as 125. However, after being trained to collaborate with a specialized math-focused model like Llemma, the two models together arrive at the correct solution of 3,125. Examples like this illustrate that Co-LLM can outperform setups in which LLMs operate independently, on both simple and complex queries.
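The arithmetic behind this example is short enough to write out. When powers of the same base are multiplied, the exponents add:

```latex
\[
a^3 \cdot a^2 = a^{3+2} = a^5,
\qquad
5^5 = 5 \cdot 5 \cdot 5 \cdot 5 \cdot 5 = 3125 .
\]
```

Note that \(125 = 5^3\), so one plausible reading of the wrong answer (an interpretation, not something the source states) is that the \(a^2\) factor was dropped.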

Another standout feature of Co-LLM is its ability to alert users when parts of an answer may need verification. This transparency is critical in applications where factual accuracy is paramount, and it further distinguishes Co-LLM from existing methods.
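The source does not say how such alerts are produced. One plausible mechanism, assumed here purely for illustration, is to record which model supplied each token and mark the expert-supplied ones so a reader knows where to double-check. The function `mark_for_review`, the `[[...]]` marker, and the example data are hypothetical.

```python
from typing import List, Tuple


def mark_for_review(trace: List[Tuple[str, str]]) -> str:
    """Join tokens into text, wrapping expert-supplied tokens in [[...]]."""
    return " ".join(
        f"[[{token}]]" if source == "expert" else token
        for token, source in trace
    )


# Each pair is (token, model that produced it); <DATE> is a placeholder.
example_trace = [("Extinct", "base"), ("around", "base"), ("<DATE>", "expert")]
marked = mark_for_review(example_trace)
print(marked)
```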

Future Directions and Imminent Improvements

While the potential of Co-LLM is already evident, researchers are exploring further enhancements. An intriguing possibility is the implementation of a more robust system that can backtrack when the expert model presents incorrect information, allowing Co-LLM to self-correct and provide a suitable response. This self-reflective mechanism resembles human-like error correction, an essential aspect when considering the reliability of AI systems.

Furthermore, the potential for Co-LLM to regularly update its knowledge base by training the general-purpose model on new information could revolutionize how LLMs operate. This continuous learning would keep the data fresh, allowing the model to remain pertinent and efficient in a fast-paced information environment, opening doors for applications in dynamic fields like healthcare and real-time information processing.

Co-LLM represents a significant leap forward in the collaborative capabilities of language models. By smartly integrating general-purpose and specialized LLMs, it fosters an environment of shared knowledge and expertise that mirrors human problem-solving dynamics. As researchers continue to refine and enhance this approach, the implications for various industries are profound. The methodology could transform not only how AI addresses complex inquiries but also how it evolves to meet the demands of an increasingly intricate world, thereby promising a new era of intelligence in artificial systems.
