The artificial intelligence (AI) landscape is evolving rapidly, and language models are at the forefront of this transformation. The recent emergence of large language models (LLMs) has been nothing short of revolutionary. These models, developed by OpenAI, Google, and others, use hundreds of billions of parameters: the adjustable settings, like gears and levers, that determine how a model behaves. The main advantage of such vast systems is their capacity to learn from data. With so many parameters, they can pick up patterns and relationships that smaller models cannot. This comes with an inherent drawback, however: training these colossal models requires staggering computational resources and energy, raising concerns about sustainability and accessibility.

Now a shift is under way. Researchers at major tech companies and academic institutions are exploring the potential of small language models (SLMs). These compact models typically use a few billion parameters, a small fraction of what LLMs rely on. Small models are not designed as general-purpose tools; instead, they excel at specialized tasks such as handling specific customer interactions, summarizing data, or acting as virtual assistants in healthcare settings. Computer scientist Zico Kolter notes that even a model with just 8 billion parameters can perform well enough for many applications. This makes small models not only more efficient but also more accessible, since they can run on mobile devices rather than requiring massive data centers.
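To make these parameter counts more concrete, the Python sketch below estimates how much memory a model’s weights occupy. It assumes the footprint is simply parameter count times bytes per parameter and ignores activations, caches, and runtime overhead. The precision table and the 400-billion-parameter comparison model are illustrative assumptions, not figures for any specific model.

```python
# Rough, weights-only memory estimate: parameters x bytes per parameter.
# Assumption for illustration: activations, caches, and runtime overhead are ignored.

BYTES_PER_PARAM = {
    "fp32": 4.0,  # 32-bit floating point
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantized
    "int4": 0.5,  # 4-bit quantized
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # The 400B model is a hypothetical stand-in for "hundreds of billions" of parameters.
    for name, params in [("8B small model", 8e9), ("400B large model", 400e9)]:
        for precision in ("fp16", "int4"):
            print(f"{name} @ {precision}: {weight_memory_gb(params, precision):.0f} GB")
```

Under these assumptions, an 8-billion-parameter model needs about 16 GB at 16-bit precision and about 4 GB at 4-bit precision, while the hypothetical 400-billion-parameter model needs hundreds of gigabytes. That gap is why only the smaller model is a plausible fit for a single consumer device.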

The Cost Factor: A Double-Edged Sword

The price tag for building and training large models is astonishing: Google’s Gemini 1.0 Ultra model reportedly cost about $191 million to train. That financial burden ripples through the AI ecosystem. Energy consumption is equally concerning; by some estimates, a single query to a model like ChatGPT uses about ten times the energy of a traditional Google search. These models therefore drive up operating costs and also raise ethical questions about the environmental impact of AI.

In response, the move toward smaller models looks not just inevitable but necessary. As organizations weigh cost efficiency against climate concerns, SLMs offer a viable alternative: because they handle targeted tasks effectively, adopting them can make AI development more sustainable. Comparing LLMs with SLMs shifts the conversation away from sheer size and capacity and toward financial responsibility and ethical considerations. This shift may democratize AI applications, opening doors for smaller companies and independent creators who were previously priced out.

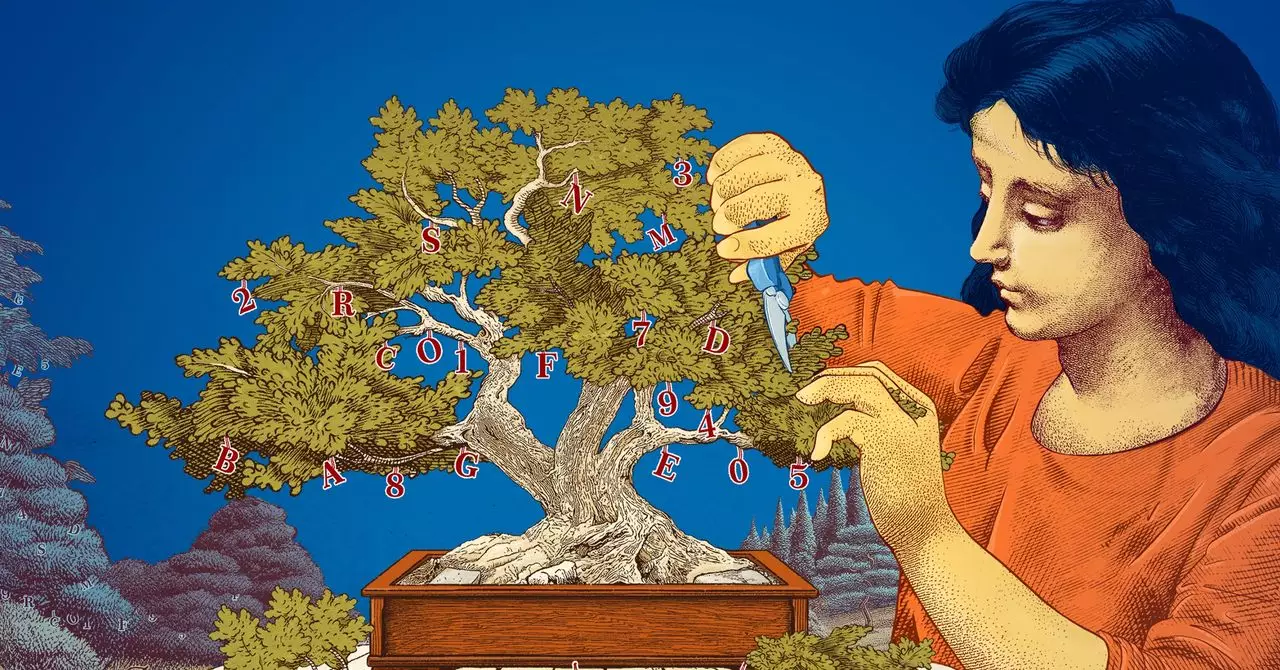

Innovative Strategies: Knowledge Distillation and Pruning

How do researchers make small language models practical without sacrificing quality? One key technique is knowledge distillation. In this approach, a larger model acts as a teacher, generating a high-quality training dataset that the smaller student model then learns from. This curated data contrasts sharply with the messy, often chaotic text gathered from the internet that is typically used to train large models. The result is small models that perform remarkably well despite having far fewer parameters.
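The paragraph above describes distillation through teacher-generated training data. A closely related and widely used formulation, the soft-target approach popularized by Hinton and colleagues, instead trains the student to match the teacher’s softened output probabilities. The Python sketch below implements only that loss term for a single example, using plain lists of logits; it illustrates the general idea under those assumptions and is not the specific pipeline behind any particular small model.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) on temperature-softened distributions.

    The result is multiplied by temperature**2 so its gradient scale stays
    comparable across temperatures, as in the soft-target formulation.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        pt * math.log(pt / ps)
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0  # terms with zero teacher probability contribute nothing
    )
    return (temperature ** 2) * kl


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]
    print(distillation_loss(teacher, teacher))          # student matches teacher
    print(distillation_loss([0.0, 0.0, 0.0], teacher))  # untrained, uniform student
```

A student whose logits equal the teacher’s gets zero loss, while a uniform student gets a positive loss that training would push down. In practice this term is usually combined with an ordinary cross-entropy loss on the true labels, and the temperature of 2.0 here is an arbitrary choice.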

Another technique is pruning, which systematically removes unnecessary parts of a neural network. The idea is inspired by the way the human brain becomes more efficient by trimming synaptic connections over time. Computer scientist Yann LeCun helped advance it with his 1989 work on “optimal brain damage,” arguing that a large share of a trained network’s parameters could be discarded without hurting performance. Pruning lets researchers tailor small language models to specific tasks, making them more efficient. Small models also give researchers a lower-stakes setting for experimentation, since testing a new idea on a small model costs far less than on a large one.
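As a minimal illustration of pruning, the Python sketch below zeroes out the fraction of weights with the smallest absolute values. This is simple magnitude pruning, a swapped-in technique that is easier to show than LeCun’s optimal brain damage, which ranks weights using second-derivative information about the loss. The function name `magnitude_prune` and the flat list of weights are hypothetical simplifications; real networks are pruned per layer or per structure and are usually fine-tuned afterward to recover accuracy.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Return a copy of `weights` with the smallest-magnitude fraction set to zero.

    `sparsity` is the fraction of weights to remove (0.0 keeps all, 1.0 removes all).
    This is plain magnitude pruning, not LeCun's second-order saliency method.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = round(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    w = [0.9, -0.05, 0.3, 0.01, -0.7, 0.02, 0.4, -0.2]
    print(magnitude_prune(w, 0.5))
```

For the weights above at 50% sparsity, the four smallest-magnitude entries (0.01, 0.02, -0.05, and -0.2) are zeroed, leaving `[0.9, 0.0, 0.3, 0.0, -0.7, 0.0, 0.4, 0.0]`.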

The Future Beckons: SLMs as Accessible Multi-Taskers

The growing adoption of small language models marks a pivotal moment for AI. LLMs will continue to play a crucial role in areas that demand broad capability, such as drug discovery and general-purpose chatbots, but smaller models help democratize access to AI. Because they are leaner and faster to train, they promise significant savings in time and computing resources.

These efficient models serve two purposes: they give businesses and individual developers affordable solutions, and they make it easier to study how AI works and what its implications are. Because smaller models are more transparent, researchers can formulate and test hypotheses with less risk. In a field where innovation often comes at great expense, smaller, targeted models show that high-quality output does not always require the massive resource investment traditionally associated with AI. As this environment keeps changing, the future of AI may rest not only with the giants but also with the nimble, efficient small models that are rapidly gaining traction.
