At a time when social media platforms face increasing scrutiny over content moderation, Threads, the microblogging platform owned by Meta, has introduced an Account Status feature. The tool gives users a clearer view of how moderation affects their activity on the platform, shedding light on processes that are often opaque. By letting users see when their posts have been removed or demoted under its community standards, Threads demonstrates a notable commitment to transparency and user empowerment.

Understanding Content Moderation

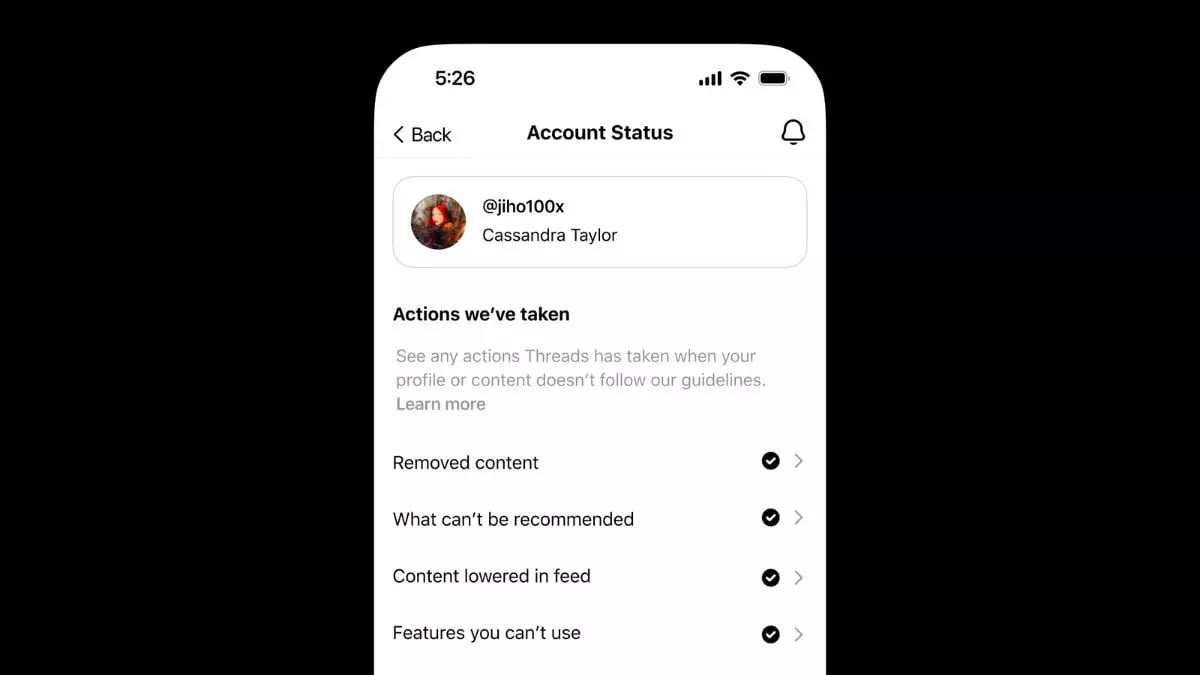

One of the most problematic aspects of social media today is the opacity of content moderation. Users often find themselves at the mercy of algorithms and guideline interpretations that change rapidly and without warning. Threads’ Account Status feature seeks to reduce this uncertainty by showing users what has happened to their posts. Under Settings > Account > Account Status, users can view the actions taken on any of their posts or replies that were found to contravene community standards. This capability gives users a greater sense of ownership over their online presence and lets them respond to moderation decisions more effectively.

The Power to Challenge Decisions

Perhaps the most empowering aspect of the Account Status feature is the ability to appeal decisions users consider unfair. Threads allows users to submit a request for review, underscoring the platform’s stated belief in a fair and nuanced moderation process. In a digital landscape where many feel devalued or unheard, a mechanism for contesting moderation actions is a refreshing step. Users receive a notification once their request has been reviewed, turning the often passive experience of social media moderation into more of a dialogue.

Balancing Expression with Responsibility

While the Account Status feature signals a strong commitment to user engagement, Threads also emphasizes the delicate balance between free expression and responsibility. The platform names authenticity, dignity, privacy, and safety as guiding principles when applying community standards. This approach matters, particularly given the complexity of moderating content that may carry societal value. By allowing some posts to remain visible despite potential violations, provided they are deemed newsworthy or in the public interest, Threads shows an understanding of context that many other platforms fail to achieve.

AI-Generated Content Under the Lens

Adding another layer to its community standards, Threads has made it clear that AI-generated content is not exempt from oversight. As the digital landscape continues to evolve, the rules that govern these platforms must adapt as well. The emphasis on treating all content equally reinforces a foundation built on fairness, while also addressing the unique challenges posed by artificial intelligence. This commitment to comprehensive moderation is essential in a world where misinformation can proliferate rapidly.

With its Account Status feature, Threads has not only improved transparency but also given users a more active role in how their content is moderated. By focusing on accountability and responsiveness, the platform sets a new standard for how social media can foster healthier communication and self-governance among its users.
