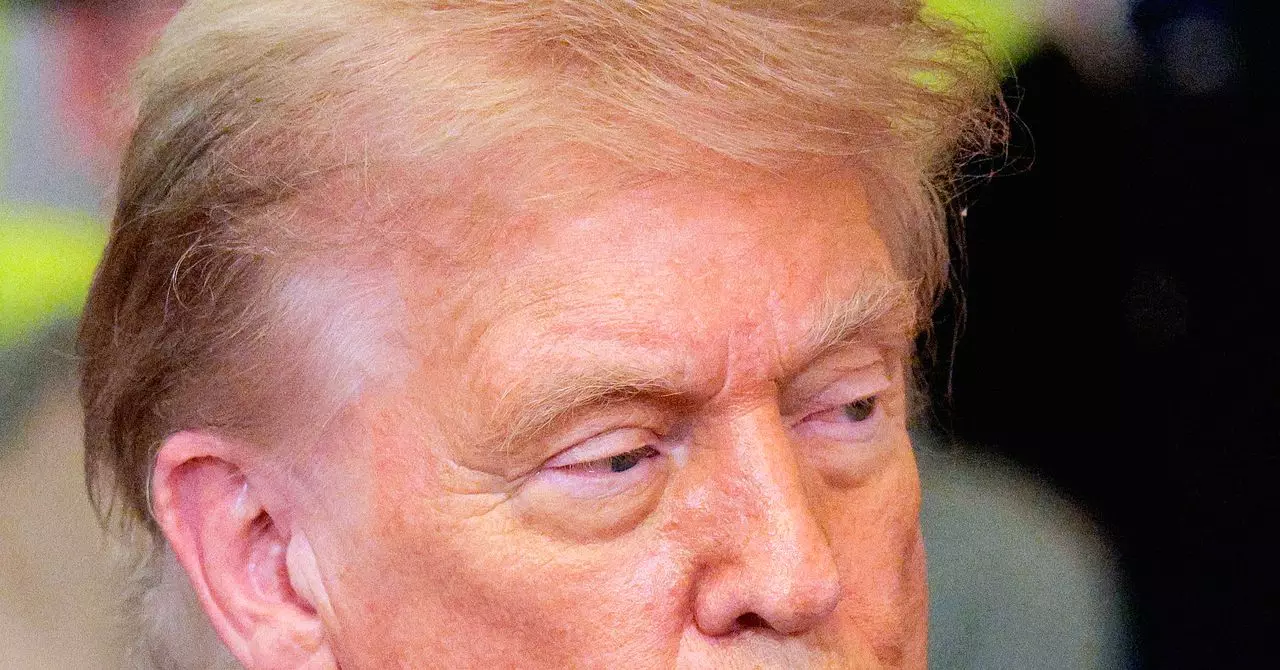

The ongoing congressional debate over President Donald Trump’s controversial AI moratorium provision reveals a deeper struggle about how America should regulate emerging technologies. Initially designed to impose a sweeping 10-year pause on state regulation of artificial intelligence, the moratorium aimed to create nationwide uniformity, ostensibly to prevent a patchwork of conflicting rules that might stifle innovation. Rather than producing consensus, however, the provision has triggered widespread distrust across a broad spectrum of political and social groups, including state attorneys general, lawmakers of sharply different ideologies, labor unions, and advocacy organizations.

This conflict isn’t just about AI policy; it’s about who gets to control how AI is regulated and whose interests get prioritized. While proponents argue that a moratorium shields AI development from hasty regulation, critics rightly fear that it grants tech giants unchecked power for years, compromising safety, privacy, and equity in the process.

The Political Tug-of-War: Shifting Positions and Mixed Signals

The political machinations surrounding the AI moratorium illustrate a chaotic and occasionally contradictory legislative process. Senator Marsha Blackburn’s evolving stance typifies this uncertainty. Once opposed to the original moratorium, she collaborated with Senator Ted Cruz to propose a diluted five-year pause with specific exemptions, only to reject this compromise soon after.

This flip-flopping isn’t mere political theater; it reflects genuine tensions among legislators trying to reconcile competing priorities. Blackburn’s roots in Tennessee’s music industry explain her push to protect artists from AI-driven deepfakes, a legitimate concern about digital exploitation. Her efforts to carve out legal protections for images, voices, and likenesses highlight that AI’s impact isn’t abstract; it touches real human rights. Yet by backing a moratorium that still restricts states’ ability to regulate, she and others risk enabling Big Tech to operate largely without accountability during a critical window.

Why Exemptions Are Not Enough

The inclusion of exemptions for child safety, deception, rights of publicity, and other sensitive areas might seem promising at first glance. But they are overshadowed by language preventing such laws from imposing an “undue or disproportionate burden” on AI systems. This clause effectively neuters the exemptions: because AI permeates everything from social media feeds to automated decision-making, almost any regulatory effort could be characterized as burdensome.

Senator Maria Cantwell’s observation that this equates to a “brand-new shield” against litigation and regulation hits the nail on the head. Instead of protecting citizens, the moratorium’s constraints grant tech companies a near-certain defense against accountability. Advocacy groups like Common Sense Media warn that this sweeping protection could undermine almost every attempt to enforce safety or privacy rules, especially those designed to protect vulnerable populations, such as children.

The Broader Consequences: Who Really Benefits?

The broad opposition—from labor unions concerned about federal overreach to far-right voices fearing unchecked corporate dominance—marks a rare consensus: the moratorium as currently framed fails to serve the public interest. In reality, it hands an extended grace period to Big Tech at a time when the risks of unregulated AI are escalating—from deepfakes to algorithmic bias, misinformation, and privacy violations.

By emphasizing a moratorium that delays state innovation in crafting AI safeguards, Congress seems to overlook the urgency of governing a technology that evolves rapidly and impacts virtually every aspect of society. Waiting five or ten years under weakened oversight isn’t a cautious approach—it is a political concession that sidelines citizens’ rights and safety to appease powerful industry players.

In this polarized environment, it’s clear the moratorium’s promises of balance and uniformity are more mirage than reality. Regulatory frameworks must not only enable innovation but also prioritize robust protections, especially at the state level, where tailored solutions often emerge first. Any effort to impose a blanket pause on regulation risks jeopardizing that essential dynamic.

A Call for Clear, Strong, and Responsive AI Governance

The AI moratorium debate underscores the urgent need for a different mindset in Washington: one that embraces smart, iterative regulation rather than broad-brush freezes. AI’s complexities defy one-size-fits-all solutions. Instead, policymakers must craft adaptive rules that protect individuals—especially vulnerable groups like children and creators—from exploitation and harm.

Rather than shielding corporations, legislators should focus on transparency, accountability, and the rights of those affected by AI’s growing reach. This means rejecting moratoriums that serve as “get-out-of-jail-free cards” and endorsing proactive measures like the Kids Online Safety Act and comprehensive privacy frameworks.

What’s at stake is more than just legislative compromise—it’s the future of a technology that will define social and economic life for decades. If Congress fails to prioritize citizen protections now, the consequences could resonate far beyond the immediate political battles.
