Chinese AI startup DeepSeek has released its latest model, DeepSeek-V3, an open-source system with 671 billion parameters. The release is a notable step for the open-source AI movement and positions DeepSeek as a serious competitor to established players such as OpenAI and Anthropic. Through a set of architectural innovations, DeepSeek-V3 aims to narrow the gap between open-source and proprietary AI models, in service of the company's longer-term ambition of reaching artificial general intelligence (AGI).

Central to DeepSeek-V3’s design is a mixture-of-experts (MoE) architecture, in which a router activates only a small subset of the model's parameters for each token (about 37 billion of the 671 billion, according to DeepSeek) rather than running the whole network. This keeps the compute cost per token well below what the total parameter count suggests while still letting different experts specialize. DeepSeek-V3 compares favorably with Meta’s Llama 3.1, which has 405 billion parameters. It also competes closely with leading closed models, which suggests that open-source models are catching up with, and in some cases surpassing, their closed-source counterparts.
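The routing idea can be illustrated with a minimal top-k gating sketch. This is a toy example, not DeepSeek's implementation: the function names, the scalar "experts", and the affinity scores are all hypothetical, and a real MoE layer would route high-dimensional token vectors to feed-forward networks.

```python
def route_token(affinities, k):
    """Return {expert_index: gate_weight} for the k highest-affinity experts."""
    ranked = sorted(range(len(affinities)), key=lambda i: affinities[i], reverse=True)
    chosen = ranked[:k]
    total = sum(affinities[i] for i in chosen)
    # Normalize so the selected gate weights sum to 1.
    return {i: affinities[i] / total for i in chosen}


def moe_layer(x, experts, affinities, k):
    """Combine the outputs of only the selected experts, weighted by their gates."""
    gates = route_token(affinities, k)
    return sum(weight * experts[i](x) for i, weight in gates.items())


# Four toy "experts", each just scaling its input (hypothetical stand-ins
# for the feed-forward networks a real MoE layer would use).
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
affinities = [0.1, 0.6, 0.2, 0.3]  # made-up router scores for one token

gates = route_token(affinities, k=2)
output = moe_layer(3.0, experts, affinities, k=2)
print(sorted(gates))  # indices of the experts that actually ran
print(output)
```

Only two of the four experts are evaluated for this token; the other two contribute no computation, which is where the efficiency of the approach comes from.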

DeepSeek-V3 retains foundational elements of its predecessor, DeepSeek-V2, particularly the multi-head latent attention (MLA) architecture. It also introduces new techniques. An auxiliary-loss-free load-balancing strategy spreads tokens evenly across the expert networks without the performance penalty that an extra balancing loss can impose. In addition, a multi-token prediction (MTP) objective trains the model to predict several future tokens at once rather than only the next one, which improves efficiency. According to DeepSeek, the result is a generation speed of up to 60 tokens per second.
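One way to picture load balancing without an auxiliary loss is a per-expert bias that is added to the router scores only when choosing experts, then nudged down for overloaded experts and up for underloaded ones after each batch. The sketch below is a simplified illustration under that assumption; the function names, step size, and toy affinity distribution are hypothetical and are not taken from DeepSeek's code.

```python
import random


def select_experts(affinities, biases, k):
    """Pick the top-k experts by biased score; the bias affects selection only."""
    scored = [a + b for a, b in zip(affinities, biases)]
    return sorted(range(len(scored)), key=lambda i: scored[i], reverse=True)[:k]


def update_biases(biases, loads, step):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(loads) / len(loads)
    updated = []
    for bias, load in zip(biases, loads):
        if load > mean_load:
            updated.append(bias - step)
        elif load < mean_load:
            updated.append(bias + step)
        else:
            updated.append(bias)
    return updated


def simulate(num_steps=200, tokens_per_step=64, k=2, step=0.01, seed=0):
    """Route noisy toy tokens for several batches; return last loads and biases."""
    rng = random.Random(seed)
    base = [0.9, 0.5, 0.4, 0.3]  # hypothetical skew: expert 0 is favored
    biases = [0.0] * len(base)
    loads = [0] * len(base)
    for _ in range(num_steps):
        loads = [0] * len(base)
        for _ in range(tokens_per_step):
            affinities = [a + rng.uniform(-0.2, 0.2) for a in base]
            for i in select_experts(affinities, biases, k):
                loads[i] += 1
        biases = update_biases(biases, loads, step)
    return loads, biases


final_loads, final_biases = simulate()
print(final_loads)
print(final_biases)
```

Because the bias only changes which experts are selected, no extra term is added to the training loss. In this toy setup the favored expert should end up with a negative bias, though the exact numbers depend on the made-up parameters above.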

Robust Training Methodology

DeepSeek-V3 was pre-trained on a dataset of 14.8 trillion high-quality tokens. Pre-training was followed by a two-stage context length extension, first to 32,000 tokens and then to 128,000 tokens. The longer context window lets the model work with large and complex inputs, such as long documents, in a single pass.

After pre-training, the model was refined through Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) to align it with human preferences and improve its general performance. The training process itself was also optimized, using mixed-precision techniques and other algorithmic and hardware-level optimizations to cut costs significantly. The total training cost is estimated at only around $5.57 million, far below the figures reported for other leading models, such as the more than $500 million estimated for Llama-3.1.
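The cost estimate is simple arithmetic: GPU hours multiplied by an hourly rental rate. The sketch below reproduces it using the per-stage GPU-hour figures and the $2 per GPU-hour rate given in DeepSeek's technical report. Those inputs come from that report rather than from this article, so treat them as assumptions here. The total also covers only the final training run, not earlier research or experiments.

```python
# GPU hours per training stage, as reported by DeepSeek (assumed inputs).
GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
RATE_USD_PER_GPU_HOUR = 2.00  # assumed rental price used in DeepSeek's estimate

total_hours = sum(GPU_HOURS.values())
total_cost_usd = total_hours * RATE_USD_PER_GPU_HOUR

print(f"Total GPU hours: {total_hours:,}")
print(f"Estimated cost: ${total_cost_usd / 1e6:.3f} million")
```

Under these inputs the total comes to 2.788 million GPU hours, or roughly $5.58 million, consistent with the "around $5.57 million" figure quoted above.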

In testing, DeepSeek-V3 emerged as the most capable open-source model available, outperforming its peers on a variety of benchmarks, especially Chinese language tasks and mathematical reasoning. It scored 90.2 on the Math-500 benchmark, well ahead of the closest competitor, Qwen, at 80. However, it faced stiff competition from Anthropic’s Claude 3.5 Sonnet, which… managed to outscore it in specific tests, a sign of how closely emerging open-source models and established closed-source offerings now compete.

The development of DeepSeek-V3 is an important moment for open-source AI: it adds diversity to the market and fosters innovation by counterbalancing the dominance of any single major player. A wider range of options lets enterprises and developers choose and tailor the AI solutions that best suit their needs.

Accessible AI: Open-Source for Everyone

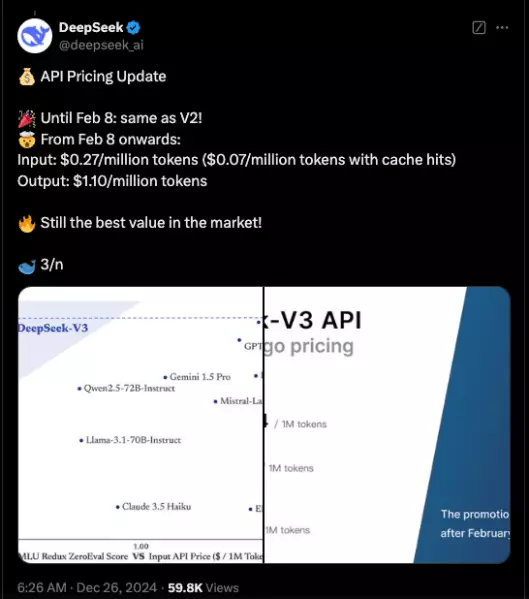

As part of its commitment to democratizing AI technology, DeepSeek has made the model publicly available. The code is on GitHub under an MIT license, while the model weights are provided under the company's own model license. This openness encourages experimentation and improvement within the community and contrasts with the closed practices of many corporate labs. Commercial access is also offered through the DeepSeek Chat interface and an API at competitive rates, so businesses can adopt a state-of-the-art model cost-effectively.

DeepSeek-V3 represents a watershed moment for the open-source AI community. With its innovative architecture, cost-efficient training approach, and strong benchmark results, it shows what collaborative and transparent AI development can achieve. The future of AI looks increasingly collaborative, and models like DeepSeek-V3 are paving the way for a more dynamic and equitable technological landscape.
