As digital communication comes under growing scrutiny for privacy breaches, Signal, the well-regarded secure messaging platform, has launched a new feature that reflects mounting concerns over data safety. Announced on a Wednesday, Signal’s new “Screen Security” feature aims to counteract Microsoft’s controversial Recall feature, which many view as an invasion of personal privacy. The move is Signal’s latest step to protect its users’ confidentiality, and it illustrates the tension tech companies face between privacy protection and new features.

Signal presents Screen Security as a necessity: the company asserts that Microsoft’s Recall left app developers without adequate tools to keep sensitive conversations out of its reach. With Recall, Microsoft’s Copilot+ PCs use an AI system that continuously tracks user activity by taking snapshots of screen content. Although it is marketed as a helpful assistant for retrieving past activity, it raises serious concerns about constant monitoring that many users may not fully understand until it is too late.

Defensive Mechanisms: The Introduction of Screen Security

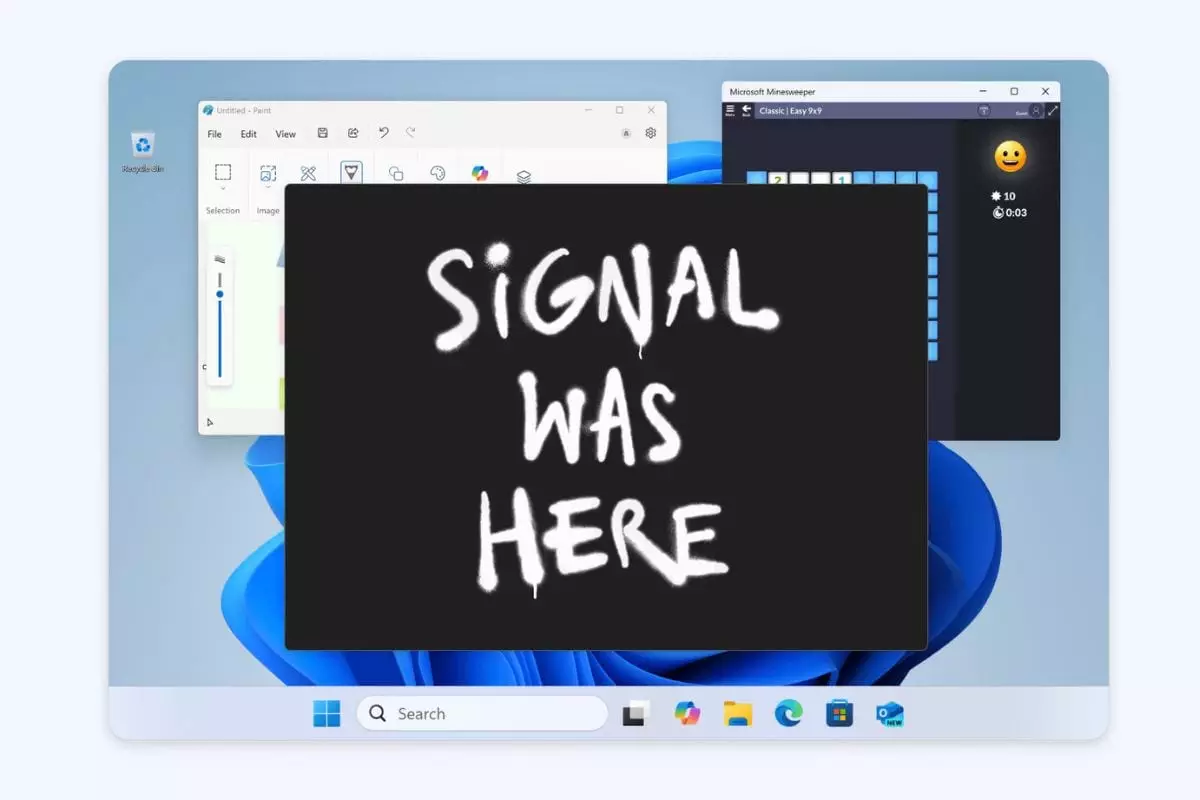

The ramifications of Microsoft’s Recall feature extend beyond the ethics of personal privacy. Users are caught in a tug-of-war between the appeal of AI-assisted convenience and the unsettling reality that their on-screen activity is being recorded without their awareness. Signal’s Screen Security feature applies a DRM-like flag to the app window, which blocks attempts to take screenshots of the app. The measure resembles the content protection used by streaming services like Netflix, which prevents users from capturing video content. By enabling it by default for Windows 11 users, Signal is emphasizing its commitment to privacy over convenience.
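The article does not say which operating-system call sits behind that DRM-like flag. As a rough illustration only, the sketch below assumes the documented Win32 function `SetWindowDisplayAffinity` with the `WDA_EXCLUDEFROMCAPTURE` value, which asks Windows to leave a window out of screen captures. The helper name `set_screen_security` is hypothetical and is not taken from Signal’s code.

```python
import ctypes
import sys

# Display-affinity values documented for the Win32 SetWindowDisplayAffinity call.
WDA_NONE = 0x00000000                # window may be captured normally
WDA_EXCLUDEFROMCAPTURE = 0x00000011  # window is left out of screen captures


def set_screen_security(hwnd: int, enabled: bool) -> bool:
    """Hypothetical helper: ask Windows to hide or show a window in captures.

    `hwnd` must be a top-level window owned by the calling process.
    Returns True only if the operating system accepted the request.
    """
    if sys.platform != "win32":
        # The flag exists only on Windows; elsewhere this sketch does nothing.
        return False
    affinity = WDA_EXCLUDEFROMCAPTURE if enabled else WDA_NONE
    user32 = ctypes.windll.user32
    result = user32.SetWindowDisplayAffinity(
        ctypes.c_void_p(hwnd), ctypes.c_uint32(affinity)
    )
    return bool(result)
```

Called with `enabled=False`, the same helper restores normal capture, which corresponds to the opt-out that accessibility users may need.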

However, new security features bring complications of their own. Signal itself acknowledges that the Screen Security setting could impair accessibility for users who rely on screen readers or magnification tools. This raises an important question: at what cost do we prioritize privacy? The answer depends on context. People with disabilities could be put at a disadvantage by strict privacy measures designed to protect the general population, which highlights the need for better tools that protect privacy without degrading the experience for some users.

Choosing Between Privacy and Accessibility

The reality is that users must make privacy choices that may leave them exposed in other areas. Signal shows a warning when users disable Screen Security, acknowledging the ongoing risk posed by Microsoft’s AI systems. The message is clear: “If disabled, this may allow Microsoft Windows to capture screenshots of Signal and use them for features that may not be private.” Such transparency is essential at a time when the line between user convenience and digital safety often blurs.

Balancing privacy and accessibility is difficult, but Signal’s proactive measures assert that users deserve control over their messaging platforms. The company’s call for software creators to be more mindful is an implicit challenge to tech giants to rethink their design philosophy so that it values user privacy alongside innovation. Its aim of keeping users from becoming pawns in the race for AI capabilities marks a pivotal moment in the conversation about technology ethics.

The Call for Responsible AI

Signal’s critique of Microsoft is more than a complaint; it raises essential questions about the broader implications of AI in our daily lives. As intelligent systems capable of capturing and analyzing vast amounts of personal data become more common, responsible practices become paramount. Signal’s message to those developing AI features such as Recall is a call for a more careful approach to user privacy, one that builds ethical considerations directly into the development process.

We find ourselves in a paradox where innovation fosters greater connectivity while posing new threats to individual privacy. Screen Security is a blunt but necessary workaround for the privacy risk that Recall creates. As we continue to use technology designed to make our lives easier, we must remain vigilant and advocate for stronger measures that uphold our right to privacy in an increasingly surveilled world.
