In an era where digital landscapes are increasingly intertwined with adolescent lives, Meta’s ongoing commitment to safeguarding young users appears both commendable and complex. The latest updates reveal a deliberate effort to create an environment that is not only more secure but also more empowering, giving teenagers greater control over their online interactions. This push symbolizes a recognition that, to truly protect the vulnerable, social media giants must proactively implement tools that anticipate and counteract potential harm rather than merely react to it.

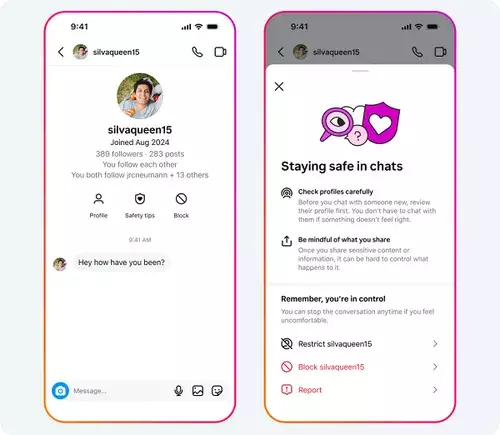

One of the most notable enhancements is the integration of “Safety Tips” prompts within Instagram chats. By offering immediate access to guidance on spotting scams and navigating complex social interactions, Meta is attempting to build digital literacy directly into the user experience. This is a strategic move, acknowledging that awareness is a crucial first line of defense. However, it is fair to question whether static tips can match the effectiveness of ongoing education and adult-led conversations about online safety.

Furthermore, Meta’s streamlined blocking and reporting systems demonstrate an effort to lower barriers that often hinder young users from defending themselves. The introduction of combined block-and-report options within direct messages indicates a recognition that convenience can lead to more decisive action. The fundamental assumption is that easier tools will lead to increased reporting and, consequently, swifter moderation. While this is a positive step, it also raises concerns about users over-relying on automation and whether the system might inadvertently suppress nuanced cases that require human judgment.

The platform’s focus on transparency about account age and origin offers users context that can help them assess potential threats. Knowing when an account was created might reveal suspicious patterns, but it does not account for the sophistication of modern digital deception, where malicious actors continuously adapt. It is a reminder that technological solutions alone are insufficient: young users need ongoing education and guidance to develop critical thinking skills.

Addressing the Dark Side: Combating Exploitation and Abuse

Meta’s proactive stance against accounts that target minors reveals an awareness of darker realities. The removal of accounts involved in sexualized abuse and solicitation underscores the persistent threat posed by predators. The figure of nearly 135,000 accounts taken down for inappropriate behavior toward underage users is staggering, but it also prompts a sobering question: how effective are these removals if the problem persists at such scale?

Despite seemingly aggressive enforcement, many abusive accounts operate in stealth, constantly rotating, disguising their identities, and exploiting platform vulnerabilities. The sheer number of accounts removed suggests the problem is large, and because offenders evade detection so readily, much of it likely remains hidden within the platform’s vast ecosystem. While Meta’s efforts are commendable, they also highlight the urgent need for more sophisticated detection algorithms, perhaps powered by artificial intelligence, capable of identifying subtle signs of grooming or exploitation before they escalate.

Moreover, protective measures such as restricting who can contact younger users and limiting location data are steps in the right direction, but their success depends heavily on user compliance and ongoing vigilance. Teenagers, often unaware of subtle manipulation, can still fall prey to predators who deliberately exploit weaknesses in how social media platforms are designed. The challenge lies not only in removing offenders but also in cultivating a culture of safety and awareness among young users and their guardians.

Balancing Safety with Freedom: A Strategic Dichotomy

While Meta’s efforts are laudable, its motivations can be read as both protective and strategic. Its support for legislation that would raise the minimum age for social media access, potentially to 16 or beyond, aligns with a broader societal push to shield minors from harmful content. Meta’s public endorsement of these policies suggests a willingness to adapt to regulatory pressure, yet it also shows how corporate interests can shape safety initiatives in ways that favor platform moderation over user empowerment.

This raises the fundamental question: are these measures enough, or do they merely serve as a veneer that masks deeper systemic issues? Raising the minimum age could indeed reduce exposure risk for the most impressionable users. Still, it risks alienating younger demographics or pushing them toward less-moderated alternatives. Truly effective protection would require not just age restrictions but also a comprehensive overhaul of platform design, genuine investment in digital literacy, and a dialogue that includes parents, educators, and youth.

Meta’s recent safety initiatives reflect an ongoing struggle in the digital arena: an attempt to strike a delicate balance between safeguarding vulnerable users and allowing free expression. While technological solutions and policy support are necessary, they are insufficient without a broader cultural shift that emphasizes responsibility, education, and accountability. The platform’s future success in protecting its youngest users hinges on whether these tools can evolve from reactive measures into proactive, adaptive safeguards that genuinely empower rather than merely restrict.
