As Meta, the tech behemoth formerly known as Facebook, navigates the complex landscape of digital privacy and security, it is once again turning to facial recognition technology. The move has drawn both interest and trepidation, especially given the considerable scrutiny the company has faced over its previous use of the technology. This article explores the implications of Meta’s latest facial recognition experiments, examining both their potential benefits and the ethical minefields the company must traverse.

Meta is testing a new approach that uses facial recognition to combat “celeb-bait” scams. Scammers have long exploited the likenesses of celebrities in ads that lure unsuspecting users to fraudulent websites. To thwart this, Meta’s system compares the faces in advertisements against the profile pictures on the corresponding public figures’ Facebook and Instagram accounts. If it detects a match, the company checks whether the ad is legitimate before deciding whether to display it.

This facial matching process is a proactive measure to protect both public figures and users, signaling Meta’s intent to shield its platforms from malicious actors. Notably, Meta has committed to immediately deleting any facial data generated during the comparison. The pledge is aimed at users and privacy advocates who worry that such data could be retained, although skepticism about the broader implications of the technology remains widespread.

To understand Meta’s return to facial recognition, it helps to revisit the company’s turbulent history with the technology. In 2021, amid growing concerns about user privacy and the potential misuse of personal data, the company shut down Facebook’s facial recognition system. Facing mounting pressure from privacy advocates, Meta sought to distance itself from that controversial reputation, an effort reflected in the experimental, narrowly targeted nature of its current tests. The earlier backlash was fueled by fears that facial recognition could be weaponized, as it already has been in countries such as China, where such tools have been used for oppressive surveillance and discrimination.

The technology’s chilling potential raises serious ethical questions. Its ability to identify and target particular groups threatens personal liberties, a risk made all the more concrete by documented cases of facial recognition being used to track certain populations and to punish minor offenses. This legacy of misuse casts a long shadow over Meta as it attempts to re-enter the field.

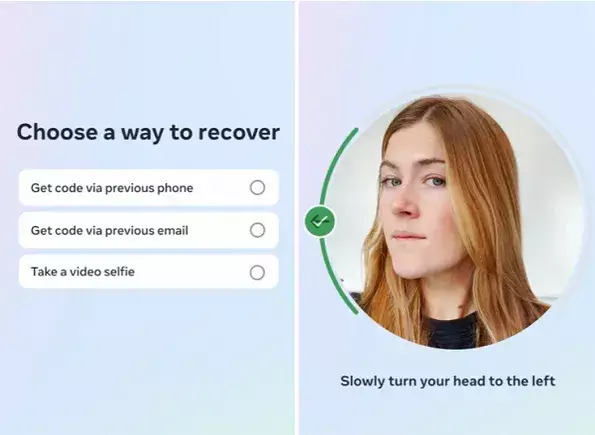

In addition to combating celeb-bait scams, Meta is exploring video selfies for identity verification. Users trying to regain access to a compromised account would upload a video selfie, which Meta would then compare against the account’s profile pictures using facial recognition. Although this resembles the face-based verification already widely used on mobile devices and apps, it still raises questions about privacy and data security.

Meta says these video selfies will not be visible to anyone else on the platform and that the data will be deleted immediately once verification is complete. The commitment is promising, but it invites skepticism given how often stored data has been exposed through security vulnerabilities and breaches. Can users truly trust Meta to handle their biometric data responsibly? That question will only grow more pressing as the company moves closer to deploying these technologies more widely.

As Meta ventures further into facial recognition, it must carefully balance security against privacy. These technologies can serve practical purposes, such as protecting user accounts and preventing fraud, but they also risk exacerbating existing problems with surveillance and data breaches. It is therefore imperative that the company establish transparent policies on how biometric data is handled, stored, and used.

Moreover, regulatory scrutiny looms large over Meta’s initiatives. Given its past missteps and the intricate web of ethical considerations surrounding facial recognition, the company must tread carefully. Failing to address these concerns adequately could trigger a backlash that not only undermines its security efforts but also erodes users’ trust.

Meta’s facial recognition experiments offer real opportunities to strengthen security, but they also underscore the delicate interplay between technological advancement and ethical responsibility. The company appears eager to find a way forward that weighs the utility of facial recognition against the imperative of protecting user privacy. Whether it can navigate these waters successfully remains to be seen, but the stakes for user trust are considerable.
