Google has moved its high-performance Gemini Embedding model into general availability. This is more than a routine update: it marks a step forward in how machines understand, process, and retrieve complex data. Gemini-Embedding-001 sits at the top of the Massive Text Embedding Benchmark (MTEB) leaderboard, a sign of Google’s ambition to lead the increasingly competitive field of semantic understanding.

What makes Gemini remarkable is not only its top-tier ranking but also its integration into Google’s broader AI infrastructure, specifically the Gemini API and Vertex AI. By placing the model inside mainstream developer tools, Google lowers the barrier to building sophisticated applications such as semantic search engines or retrieval-augmented generation (RAG) systems. Developers get a model that captures the nuance and context of human language without having to train or host it themselves, which opens the door to smarter, more intuitive AI-driven solutions.

However, mere ranking and integration don’t tell the full story. Google’s approach underscores a significant shift towards generalized models that are “plug-and-play,” capable of operating across diverse domains—be it finance, legal, or engineering—without extensive fine-tuning. This versatility caters well to enterprise needs, where rapid deployment, cost efficiency, and robustness are paramount. Yet, the landscape is fiercely competitive, with open-source models and specialized alternatives constantly challenging the top spot, forcing Google to innovate relentlessly to maintain its edge.

Understanding Embeddings — The Heart of Modern AI

At their core, embeddings convert raw data (words, images, videos) into numerical vectors. These vectors capture semantic meaning, which lets AI systems recognize synonyms, detect sentiment, or match related content. For example, two differently worded sentences with similar meanings produce embeddings that sit close together in the high-dimensional vector space, while unrelated sentences land far apart. That proximity supports tasks such as document clustering, recommendation engines, and content moderation, all of which go well beyond what traditional keyword matching can do.
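To make "close together" concrete, here is a minimal sketch of cosine similarity, one common way to measure proximity between embeddings. The four-dimensional vectors are invented for illustration and are not real model output; real embeddings have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings", made up for this example only.
cat_sentence = [0.9, 0.1, 0.0, 0.2]      # "The cat sat on the mat."
kitten_sentence = [0.8, 0.2, 0.1, 0.3]   # "A kitten rested on the rug."
invoice_sentence = [0.0, 0.9, 0.8, 0.1]  # "Please pay the attached invoice."

similar = cosine_similarity(cat_sentence, kitten_sentence)
unrelated = cosine_similarity(cat_sentence, invoice_sentence)
print(f"similar pair:   {similar:.3f}")
print(f"unrelated pair: {unrelated:.3f}")
```

The two sentences about cats score much higher than the cat and invoice pair, which is the behavior a semantic search or clustering system relies on.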

The versatility of embeddings extends beyond text. Multimodal applications, which combine visuals and text, are transforming industries like e-commerce, where product descriptions are fused with images to create unified representations. This multimodal approach makes retrieval systems more accurate and context-aware.

Furthermore, embeddings are increasingly vital in AI agents that need to retrieve, interpret, and respond to vast and varied datasets dynamically. These models are integral to building scalable, intelligent systems capable of continuous learning and adaptation, which is crucial in an era where data complexity and volume are growing exponentially.
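The retrieval step that such agents and RAG systems depend on can be sketched in a few lines. This is an illustrative example under stated assumptions, not a production design: the document IDs and two-dimensional vectors are hypothetical, every vector is assumed to be unit length already (so a dot product equals cosine similarity), and a real system would typically use a vector database rather than a linear scan.

```python
import heapq


def top_k(query_vec, corpus, k=2):
    """Return the IDs of the k documents whose vectors best match the query.

    corpus maps a document ID to a unit-length vector, so the dot product
    below is equivalent to cosine similarity.
    """
    scored = (
        (sum(q * d for q, d in zip(query_vec, vec)), doc_id)
        for doc_id, vec in corpus.items()
    )
    return [doc_id for _, doc_id in heapq.nlargest(k, scored)]


# Hypothetical corpus of pre-computed, unit-length document embeddings.
corpus = {
    "refund-policy": [1.0, 0.0],
    "shipping-times": [0.6, 0.8],
    "office-party": [0.0, 1.0],
}
query = [0.8, 0.6]  # hypothetical embedding of the user's question

print(top_k(query, corpus, k=2))
```

In a RAG pipeline, the text of the returned documents would then be passed to a language model as context for generating the answer.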

Flexibility and Accessibility: The Key Strengths of Gemini

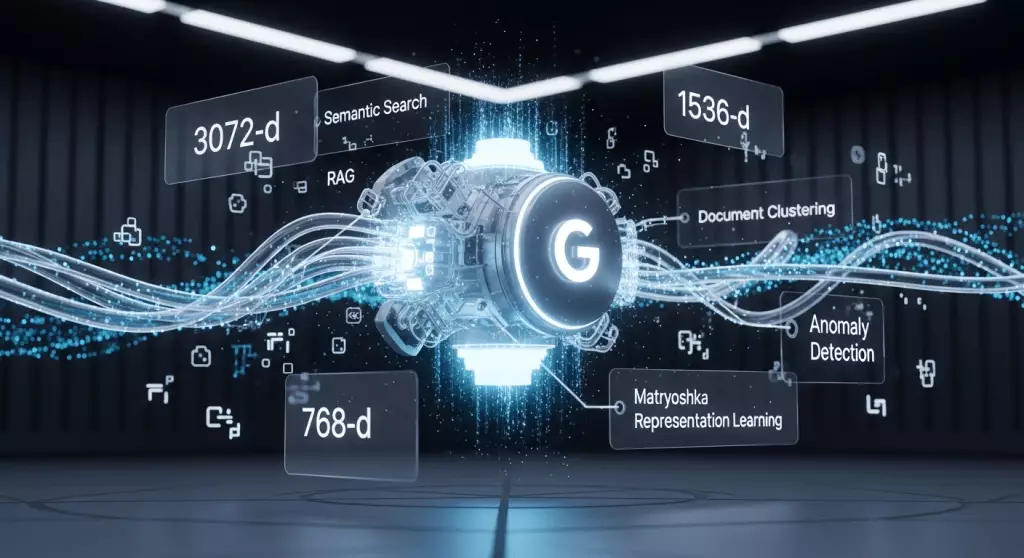

One standout feature of Google’s Gemini model is its flexibility. Trained with Matryoshka Representation Learning (MRL), the model offers adjustable embedding sizes. Users can generate full 3072-dimensional vectors or truncate them to smaller sizes such as 1536 or 768 dimensions, retaining most of the critical information while cutting compute and storage costs. This lets enterprises tune their AI infrastructure to specific needs, whether the priority is speed, memory efficiency, or depth of understanding.
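The truncation idea can be sketched as follows. This assumes the usual MRL property that the leading dimensions carry the most information, and it re-normalizes the shortened vector to unit length, which is a common practice before cosine or dot-product comparison rather than a detail taken from this article. The input vector here is synthetic, not real model output, and `truncate_embedding` is a hypothetical helper name.

```python
import math


def truncate_embedding(vector, dims):
    """Keep the first `dims` values of a vector and rescale to unit length."""
    if not 0 < dims <= len(vector):
        raise ValueError("dims must be between 1 and the vector length")
    head = vector[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        raise ValueError("cannot normalize an all-zero vector")
    return [x / norm for x in head]


# Synthetic stand-in for a 3072-dimensional embedding.
full = [math.sin(i + 1) for i in range(3072)]

medium = truncate_embedding(full, 1536)
small = truncate_embedding(full, 768)
print(len(full), len(medium), len(small))
```

The storage effect is simple arithmetic: at 4 bytes per 32-bit float, a 3072-dimensional vector takes 12,288 bytes, while a 768-dimensional one takes 3,072 bytes, a fourfold reduction per stored item.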

Most notably, Gemini’s design emphasizes ease of use. Marketed as a “general-purpose” model that performs well across multiple domains without extensive fine-tuning, it simplifies deployment for teams without deep AI expertise. Combined with support for over 100 languages and a competitive price of $0.15 per million tokens, Google aims to democratize access to powerful AI tools and encourage widespread adoption.
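As a rough illustration of what that price means in practice, the sketch below estimates the one-time cost of embedding a corpus. Only the $0.15 figure comes from the text above; the corpus size and average document length are hypothetical, and actual billing terms may differ.

```python
PRICE_PER_MILLION_TOKENS_USD = 0.15  # price quoted in the article


def estimate_embedding_cost(num_documents, avg_tokens_per_document,
                            price_per_million=PRICE_PER_MILLION_TOKENS_USD):
    """Estimate the cost in USD of embedding a corpus once."""
    total_tokens = num_documents * avg_tokens_per_document
    return total_tokens / 1_000_000 * price_per_million


# Hypothetical corpus: one million documents averaging 500 tokens each.
cost = estimate_embedding_cost(1_000_000, 500)
print(f"Estimated cost: ${cost:,.2f}")
```

Under these assumptions the corpus contains 500 million tokens, so embedding it once would cost about $75.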

Yet, despite these advantages, being a closed, API-only offering limits its fit for enterprise applications that demand sovereignty and customization. This raises the question: is a top-tier proprietary model enough to win over the enterprise market, or will open-source alternatives inevitably challenge its dominance?

The Competitive Landscape: Proprietary Versus Open-Source Models

While Gemini’s impressive leaderboard position indicates Google’s leadership, the AI community is far from complacent. Open-source models like Alibaba’s Qwen3-Embedding have rapidly climbed the ranks, offering striking performance under permissive licenses suitable for commercial use. These models appeal strongly to organizations that value control over their infrastructure, data privacy, and customization capabilities.

Open-source alternatives also bring a unique advantage: transparency. Enterprises handling sensitive data—such as those in finance, healthcare, or government—may prefer to keep their models in-house rather than rely on external API providers. Open models like Qwen3-Embedding or Qodo’s domain-specific embedding models offer tailored performance, be it for code, images, or specialized document types, making them compelling options for niche applications.

This emerging “arms race” raises questions about the future of proprietary AI offerings. Will centralized giants like Google, with their robust models and seamless integrations, maintain their dominance, or will open-source communities foster a more diverse and democratized ecosystem? My stance leans toward a hybrid future, where enterprise decisions will be driven by specific needs—control and privacy for some, convenience and scalability for others.

Strategic Implications for Enterprises

For enterprises, choosing between a proprietary, top-performing model and a flexible open-source counterpart isn’t merely a technical decision; it’s a strategic one. The allure of Gemini’s high accuracy, broad language support, and integration into existing Google Cloud infrastructure makes it an attractive choice for organizations seeking reliability and speed of deployment. Its out-of-the-box compatibility with various domains cuts down development time, enabling businesses to focus on their core value propositions.

Conversely, organizations that prioritize sovereignty, cost management, and bespoke customization will find compelling value in open-source alternatives. The ability to deploy models locally or on private clouds offers security advantages, especially for regulated industries. Furthermore, open models encourage innovation through customization—adapting embeddings for specific industry jargon or domain-specific semantics that generic models might not capture effectively.

Ultimately, this competition accelerates innovation, pushing both proprietary and open-source projects to improve continually. Enterprises that can intelligently navigate this landscape, balancing their immediate needs with long-term strategic goals, will be best positioned to leverage AI’s transformative potential effectively.

In this rapidly evolving environment, one thing remains certain: the power of embeddings is at the heart of next-generation AI applications. Who leads in this space will shape the future of intelligent systems, whether through dominance in proprietary models or through community-driven open-source innovation.
