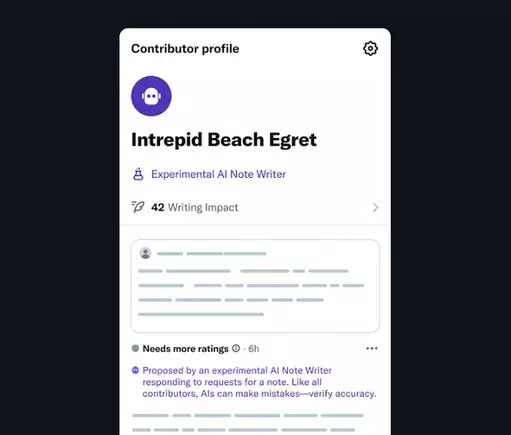

The integration of artificial intelligence into community-driven fact-checking marks a significant shift in how platforms like X are trying to combat misinformation. X is introducing AI Note Writers, automated bots that can draft their own Community Notes. They are an ambitious effort to increase the speed, scope, and accuracy of crowd-sourced corrections. The initiative reflects the growing reliance on digital tools for fact verification and aims to use AI's capacity for rapid data processing and contextual analysis. By letting developers build domain-specific bots, X is expanding the reach of fact-checking to better match the demands of an information-saturated era.

In theory, this approach promises a more efficient fact-checking pipeline. Human contributors would no longer have to labor manually over every piece of misinformation. They could focus on subtler issues that require nuanced judgment, while bots handle straightforward factual discrepancies and supply immediate feedback and references. Such a hybrid system could streamline verification, slow the spread of false claims, and foster a more transparent information environment. Because the community also rates the helpfulness of AI-generated notes, X aims to create a feedback loop that improves the bots' accuracy and impartiality over time.

Challenges and Concerns: The Balance of Power and Perspective

Despite this promise, deploying AI-powered Community Notes raises underlying questions of bias, manipulation, and ideological control. Elon Musk's criticism of his own AI's sourcing illustrates how hard it is to balance openness against bias control. When Musk publicly rebuked his Grok chatbot for citing certain media outlets, he revealed a contentious view of what counts as an acceptable data source. He later pledged to curate Grok's training data around information he considers politically “incorrect” yet truthful. That pledge suggests a desire to shape the AI's responses in line with his own ideological views.

This raises a fundamental concern: will AI systems controlled by corporate or individual gatekeepers end up reflecting their owners' biases, or even serve as tools for ideological shaping? Suppose X begins restricting the datasets that AI Note Writers can draw on, favoring certain narratives or sources. Automation could then reinforce a particular worldview rather than promote objective truth. In that scenario, fact-checking becomes a weapon for shaping opinion rather than verifying facts, undermining the core purpose of community-driven correction.

Furthermore, any push to align AI outputs with Musk's perspectives could skew the information landscape. If AI-written notes are filtered through a narrow ideological lens, user trust could drop sharply. The danger is that automated systems become echo chambers, silencing dissenting views under the guise of “accuracy.” Greater speed and scalability are valuable, but not at the expense of diversity of thought and transparency.

The Future of AI in Public Discourse: Hope or Hazard?

Ultimately, AI Note Writers are a double-edged sword. On one hand, they could transform how online platforms combat misinformation, delivering faster, more consistent, data-backed corrections. On the other, they open a Pandora's box of manipulation, bias, and censorship. Musk's evolving approach to sourcing and data curation hints at a future in which AI tools serve ideological reinforcement as much as they serve the truth.

Automated, AI-driven fact-checking is an appealing prospect, but the real challenge is ensuring these systems are genuinely objective and inclusive. Without careful oversight, they risk becoming proxies for specific political agendas, shaping public discourse rather than illuminating it. The success of X's initiative will depend heavily on how transparently and responsibly AI Note Writers are implemented. It will also depend on safeguards that keep bias out of the core of automated fact-checking.

The rollout of X's AI Community Notes pilot is an important step. It remains to be seen whether it will genuinely improve the integrity of online conversations or simply become a tool for ideological policing. As AI grows more sophisticated, the onus is on platform developers and users alike to demand systems built on fairness, transparency, and accountability. AI's promise of fostering honest dialogue can be realized only if these tools are managed wisely rather than weaponized for control or censorship.
