A team at the German Aerospace Center’s Institute of Robotics and Mechatronics has given a robot a sense of touch without relying on artificial skin. Instead of covering the robot in tactile sensors, their approach combines the sensors already inside the robot with machine learning. As robots increasingly work alongside people, this research is a step towards responsive, adaptive machines that can sense physical contact and react to it.

Beyond Artificial Skin: Emulating Touch

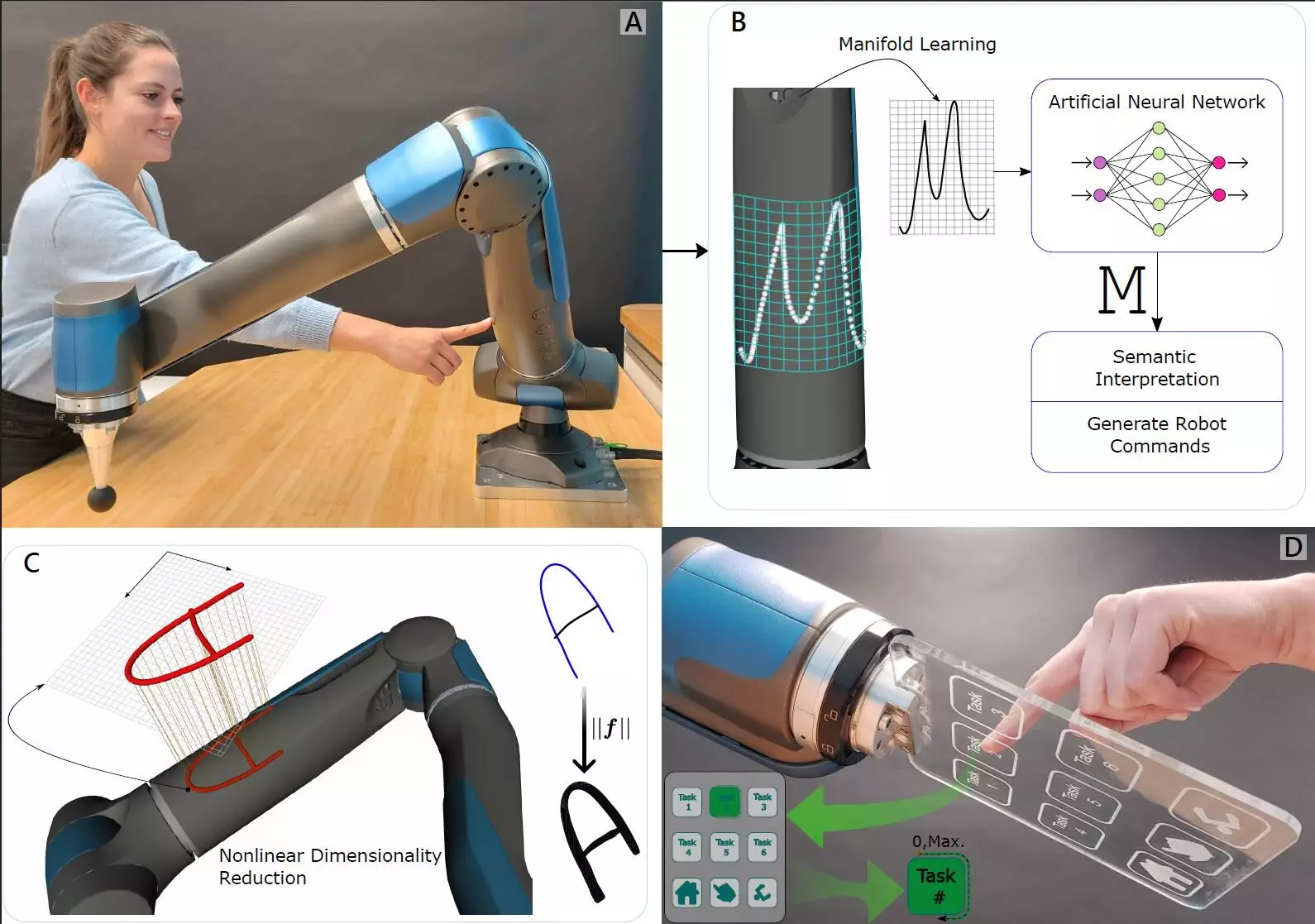

Robots usually gain human-like touch sensations through large, complex artificial skins, which work but can be cumbersome and costly. The new study takes a different route and relies on the force-torque sensors embedded in the robot’s joints. A touch on the arm changes the forces and torques measured at those joints, so the researchers can infer contact from the robot’s own internal readings rather than from a sensor at the point of contact. The result resembles the biological experience of touch, but it comes from a mechanical interpretation of the loads on the robot’s joints.
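
To make the idea of locating a touch from internal readings concrete, here is a minimal sketch. It is not the researchers' method: it assumes a single thin, straight link in a plane, one point contact, and an ideal sensor at the base of the link that reports two force components and one moment. The names `Wrench` and `locate_contact`, and the `min_force` threshold, are hypothetical and chosen only for illustration. Under those assumptions, the moment equals the perpendicular force times the distance to the contact, so the distance can be recovered by division.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Wrench:
    """Hypothetical reading from a force-torque sensor at the base of a link."""
    fx: float  # force along the link axis, in newtons
    fy: float  # force perpendicular to the link, in newtons
    mz: float  # moment about the sensor origin, in newton-metres


def locate_contact(
    w: Wrench, link_length: float, min_force: float = 0.5
) -> Optional[Tuple[float, float]]:
    """Estimate where along a thin planar link a single contact occurred.

    Returns (distance_from_sensor_m, force_magnitude_N), or None when the
    reading is too weak or inconsistent with a contact on the link.
    """
    magnitude = (w.fx ** 2 + w.fy ** 2) ** 0.5
    if magnitude < min_force:
        return None  # below the (assumed) noise threshold: treat as no touch
    if abs(w.fy) < 1e-9:
        return None  # a purely axial force carries no information about position
    # For a contact at distance d on a thin link: mz = d * fy, so d = mz / fy.
    distance = w.mz / w.fy
    if not 0.0 <= distance <= link_length:
        return None  # the estimate falls outside the physical link
    return distance, magnitude


# A 4 N push perpendicular to a 0.4 m link, applied 0.25 m from the sensor,
# produces a moment of 0.25 * 4 = 1.0 N*m.
reading = Wrench(fx=0.0, fy=4.0, mz=1.0)
result = locate_contact(reading, link_length=0.4)
```

Here `result` is `(0.25, 4.0)`: the contact position and the intensity both come from quantities measured at the joint. A real arm has several joints, gravity, friction, and its own motion to account for, which is part of why the study pairs the sensors with machine learning.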

Training Robots through Machine Learning

Central to this work is a machine-learning algorithm that interprets the data from the internal sensors. With it, the robot can tell different kinds of touch apart based on the loads exerted on its joints. This gives the robot something like a vocabulary of touch: it registers not only that it has been touched, but also enough about the contact to guide how it moves and interacts in different environments.

For instance, a robot arm equipped with this technology can discern the location and intensity of a touch, which opens up applications from manufacturing to personal assistance. Imagine a robot that adapts its movements in response to human presence, keeping collaboration safe in industrial settings where close interaction is routine.
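
As a rough illustration of how a learned model might map joint readings to a touch location, the sketch below uses a nearest-centroid classifier. This is a deliberately simple stand-in: the article does not say which algorithm the team used, and the training data, the three-joint feature layout, and the labels are all made up for the example.

```python
from collections import defaultdict
from math import dist


def fit_centroids(samples):
    """Average the feature vectors of each label.

    samples: list of (feature_vector, label) pairs.
    Returns a dict mapping each label to its mean feature vector.
    """
    grouped = defaultdict(list)
    for features, label in samples:
        grouped[label].append(features)
    return {
        label: [sum(column) / len(column) for column in zip(*rows)]
        for label, rows in grouped.items()
    }


def classify(centroids, features):
    """Return the label whose centroid is closest (Euclidean) to features."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Made-up training data: torque readings (N*m) from three joints, labelled
# with the part of the arm that was touched when they were recorded.
TRAINING = [
    ([0.9, 0.1, 0.0], "upper_arm"),
    ([1.1, 0.2, 0.1], "upper_arm"),
    ([0.8, 0.9, 0.1], "forearm"),
    ([1.0, 1.1, 0.0], "forearm"),
    ([0.7, 0.8, 0.6], "wrist"),
    ([0.9, 1.0, 0.8], "wrist"),
]

centroids = fit_centroids(TRAINING)
prediction = classify(centroids, [0.85, 0.95, 0.65])
```

With this toy data, `prediction` is `"wrist"`, because the new reading lies closest to the average of the wrist examples. A practical system would need far more data and a model that also estimates intensity, but the structure is the same: labelled sensor readings go in, and a touch description comes out.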

Implications for Future Interactions

The ramifications of this research extend beyond the mechanics of a single robot arm. The ability to interpret touch could change how robots and people interact and make it easier to bring robots into everyday settings. As robots become more aware of physical contact through this kind of touch feedback, industries could rework their workflows, strengthen safety protocols, and build more efficient human-robot teams.

In light of this promising research, there’s a fundamental question: how long until we see widespread adoption of such technologies? As the boundaries between human and robotic capabilities steadily blur, embracing such innovations may herald a new era of understanding and cooperation, bringing a wealth of opportunity in fields like healthcare, manufacturing, and beyond.

This pairing of internal sensing and machine learning is more than a stride in robotics. It is a reminder of what becomes possible when advanced computational techniques are applied to a fundamental human experience such as touch. The future of robotics is not merely about building machines; it is about crafting companions that can understand, respond to, and communicate with the world around them.
