Deep learning, a subset of artificial intelligence (AI), is making its mark across various sectors, including healthcare and finance. As the demand for these advanced models escalates, the computational requirements become daunting, often necessitating cloud-based solutions. However, this reliance brings serious security and privacy concerns, especially in sensitive fields such as healthcare. In response to these challenges, researchers at the Massachusetts Institute of Technology (MIT) have pioneered an innovative approach harnessing the principles of quantum mechanics to enhance data security while performing deep-learning tasks in cloud environments.

With increasing amounts of data collected in healthcare settings, the potential for AI-driven insights is immense. Hospitals could use deep learning to analyze medical images and provide rapid diagnoses, potentially revolutionizing patient care. However, because the data involved is often confidential patient information, healthcare providers are hesitant to embrace cloud-based AI solutions. Concerns about unauthorized access and data breaches are compounded by privacy regulations such as HIPAA, which set strict rules for handling patient information.

Mitigating these concerns is critical. Traditional encryption methods, while helpful, may not provide sufficient protection in the complex landscape of cloud computing. As such, the need for a more robust solution is urgent. This is where MIT’s research comes into play, offering a novel security protocol that employs quantum properties to ensure the privacy of data transmitted to and from cloud servers.

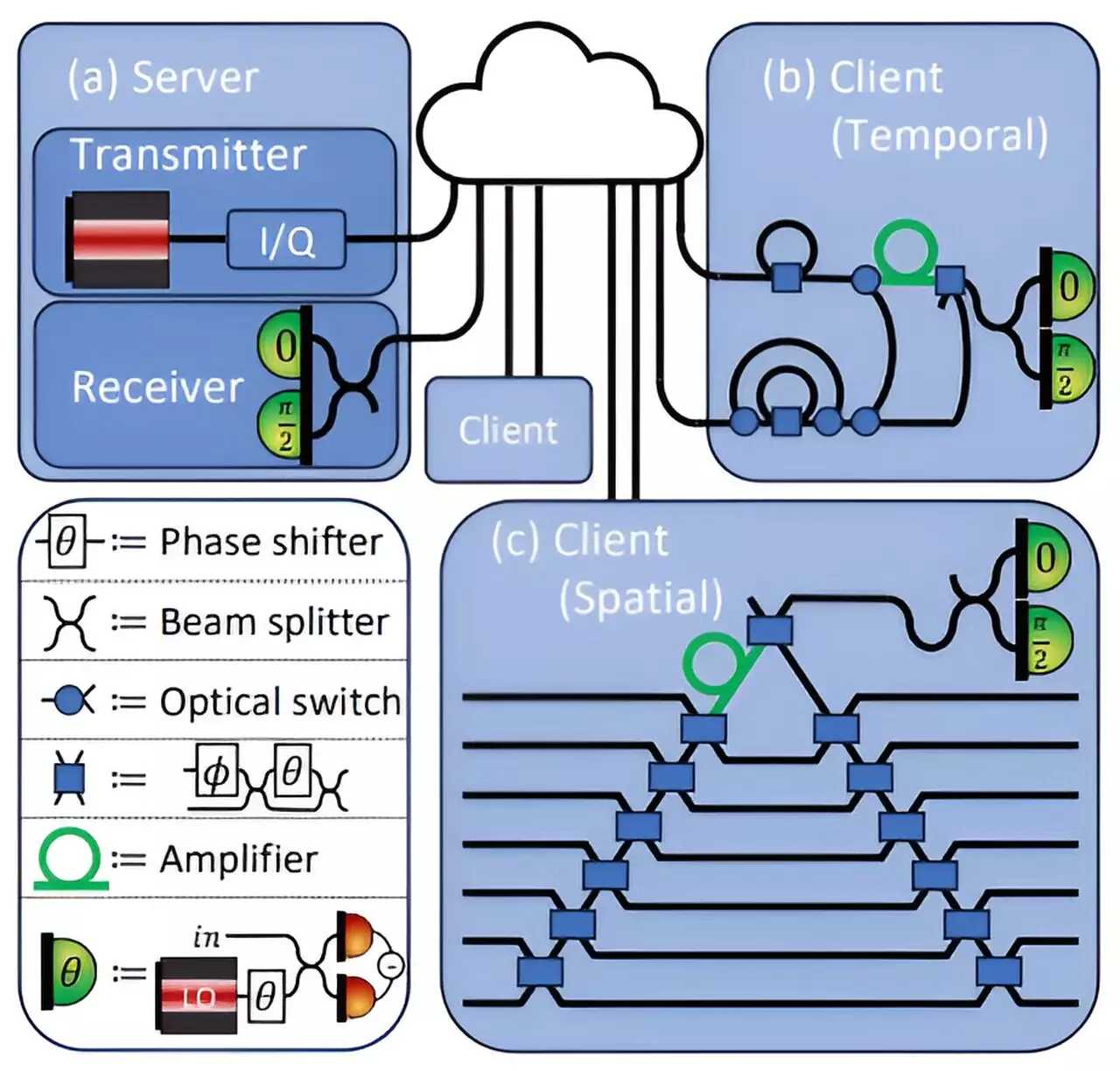

The MIT research team’s protocol leverages the fundamental principle of quantum mechanics known as the no-cloning theorem. According to this principle, it is impossible to create an identical copy of an unknown quantum state. By effectively utilizing this characteristic, the researchers developed a system where data transmitted through optical fibers can remain confidential, even during complex deep-learning operations.
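To make the no-cloning idea concrete, here is a minimal sketch in plain Python. It is a textbook illustration, not the researchers' optical setup: it assumes a single qubit represented as a list of real amplitudes and uses a CNOT gate as a hypothetical "copier". The gate copies the basis states |0> and |1> perfectly, yet fails on the superposition |+>, which is the kind of failure the theorem guarantees for any would-be universal copier. All function names are invented for this example.

```python
import math

def kron(a, b):
    """Tensor product of two state vectors given as lists of amplitudes."""
    return [x * y for x in a for y in b]

def apply_cnot(state):
    """Apply CNOT (control = first qubit) to a 2-qubit state ordered [00, 01, 10, 11]."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]  # swaps the |10> and |11> amplitudes

def overlap(a, b):
    """Squared inner product |<a|b>|^2 for real-valued state vectors."""
    return sum(x * y for x, y in zip(a, b)) ** 2

ZERO = [1.0, 0.0]
ONE = [0.0, 1.0]
PLUS = [1 / math.sqrt(2), 1 / math.sqrt(2)]  # equal superposition of |0> and |1>

def clone_fidelity(psi):
    """Fidelity between the CNOT 'copier' output and a perfect copy psi (x) psi."""
    attempted = apply_cnot(kron(psi, ZERO))  # try to copy psi onto a blank qubit
    perfect = kron(psi, psi)                 # what a true clone would look like
    return overlap(attempted, perfect)

if __name__ == "__main__":
    for name, psi in [("|0>", ZERO), ("|1>", ONE), ("|+>", PLUS)]:
        print(name, round(clone_fidelity(psi), 3))
```

The fidelity is 1 for the two basis states and 0.5 for |+>: the copier that works on known basis states produces an entangled pair rather than two independent copies of an unknown superposition.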

In practical terms, the information exchanged between client and server is encoded into laser light sent over optical fiber. The data does not simply traverse the network as bits that can be duplicated; it is carried by a quantum state that cannot be copied perfectly. If someone tries to copy or intercept it, the encoding is disturbed, and that disturbance can alert the client and the server to a security breach.
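The claim that interception leaves a detectable trace can be illustrated with a standard toy model. The sketch below is not the MIT protocol, which encodes information in laser light; it swaps in the simpler intercept-and-resend scenario familiar from BB84-style analyses, with single qubits prepared in one of two bases. The function names, the basis labels `"Z"` and `"X"`, and the round counts are all assumptions made for illustration.

```python
import random

def measure(bit, prep_basis, meas_basis, rng):
    """Measure a qubit prepared as `bit` in `prep_basis` using `meas_basis`.

    Same basis: the outcome is the prepared bit.
    Different basis: the outcome is a fair coin flip.
    """
    if prep_basis == meas_basis:
        return bit
    return rng.randint(0, 1)

def error_rate(n_rounds, eavesdrop, seed=0):
    """Fraction of mismatched bits among rounds where sender and receiver bases agree."""
    rng = random.Random(seed)
    errors = 0
    kept = 0
    for _ in range(n_rounds):
        bit = rng.randint(0, 1)
        send_basis = rng.choice("ZX")
        recv_basis = rng.choice("ZX")
        state_bit, state_basis = bit, send_basis
        if eavesdrop:
            # The eavesdropper must guess a basis, measure, and resend what she saw.
            eve_basis = rng.choice("ZX")
            eve_bit = measure(state_bit, state_basis, eve_basis, rng)
            state_bit, state_basis = eve_bit, eve_basis
        result = measure(state_bit, state_basis, recv_basis, rng)
        if recv_basis == send_basis:  # only matching-basis rounds are compared
            kept += 1
            errors += result != bit
    return errors / kept if kept else 0.0

if __name__ == "__main__":
    print("no eavesdropper:", error_rate(20000, eavesdrop=False))
    print("eavesdropper:   ", error_rate(20000, eavesdrop=True))
```

Without an eavesdropper the compared bits always agree. With one, roughly a quarter of them disagree in this model, because a wrong basis guess randomizes the state before it reaches the receiver. That error rate is the "disruption" the two honest parties can check for.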

The unique feature of this security protocol is its dual ability to protect both the client’s sensitive data and the proprietary algorithms held by cloud service providers, such as those employed in deep neural networks. In the study, the researchers created a scenario where a client—a healthcare provider with confidential medical images—could utilize a deep learning model hosted on a central server without compromising patient information or revealing critical parts of the model.

In this setup, the server transmits encoded neural network weights to the client, who then applies computations based on their own data. The clever design of the protocol ensures that the server and client can interact securely while maintaining the confidentiality of the healthcare data involved. Furthermore, the quantum nature of the system ensures that any unauthorized attempt to glean insights from the transmission results in noticeable disruptions, allowing for real-time security checks.

In tests, the protocol maintained 96% prediction accuracy while enforcing its security guarantees. The implications are far-reaching: healthcare providers can use powerful AI tools without exposing patient data, and model owners do not reveal crucial aspects of their machine learning models. The researchers reported that the small amount of information leaked on the client's side amounts to less than 10% of what an adversary would need to recover the hidden information.

This breakthrough not only solidifies data privacy standards in cloud-based AI applications but also sets the stage for future advancements. The team plans to explore applications of this protocol in federated learning, a system that supports training models across multiple decentralized devices while keeping data localized. Such exploration could further enhance both security and efficiency within various data-intensive industries.
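For readers unfamiliar with federated learning, the following is a minimal sketch of federated averaging, a common baseline for this setting. It is not part of the MIT work and says nothing about how the quantum protocol would be combined with it. The one-parameter model (y ≈ w · x), the learning rate, and the two toy client datasets are assumptions chosen to keep the example short. Each client trains on its own data, and only the resulting weights, never the raw data, are sent back and averaged.

```python
def local_update(w, data, lr=0.1, epochs=5):
    """One client's local training: gradient descent on mean squared error for y ~ w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_average(global_w, client_datasets, rounds=20):
    """Repeatedly train locally on each client, then average weights by dataset size."""
    for _ in range(rounds):
        # Each client starts from the current global weight and returns only its update.
        updates = [(local_update(global_w, d), len(d)) for d in client_datasets]
        total = sum(n for _, n in updates)
        global_w = sum(w * n for w, n in updates) / total
    return global_w

# Hypothetical local datasets; every point satisfies y = 3 * x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
]

if __name__ == "__main__":
    print(federated_average(0.0, clients))
```

The server in this sketch sees only per-client weights and dataset sizes, and the shared model still converges to the slope that fits all of the clients' data.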

As deep learning models continue to evolve and move into critical sectors, balancing computational capability against data security becomes paramount. The MIT researchers' work suggests that this balance is achievable by exploiting the quantum properties of light. It makes a compelling case for secure cloud-based computation and for safer use of AI in healthcare and beyond, and it offers grounds for optimism that technology can address some of the most pressing data privacy challenges.
