Human-robot imitation learning holds great promise for developing robots that can closely mimic human actions and movements in real time. Despite significant advances in imitation learning techniques in recent years, several challenges remain. One of the main hurdles is the lack of correspondence between a robot’s body and that of its human user. This mismatch can significantly degrade the performance of imitation learning algorithms, limiting the robot’s ability to accurately replicate human motions.

The Introduction of a New Deep Learning Model

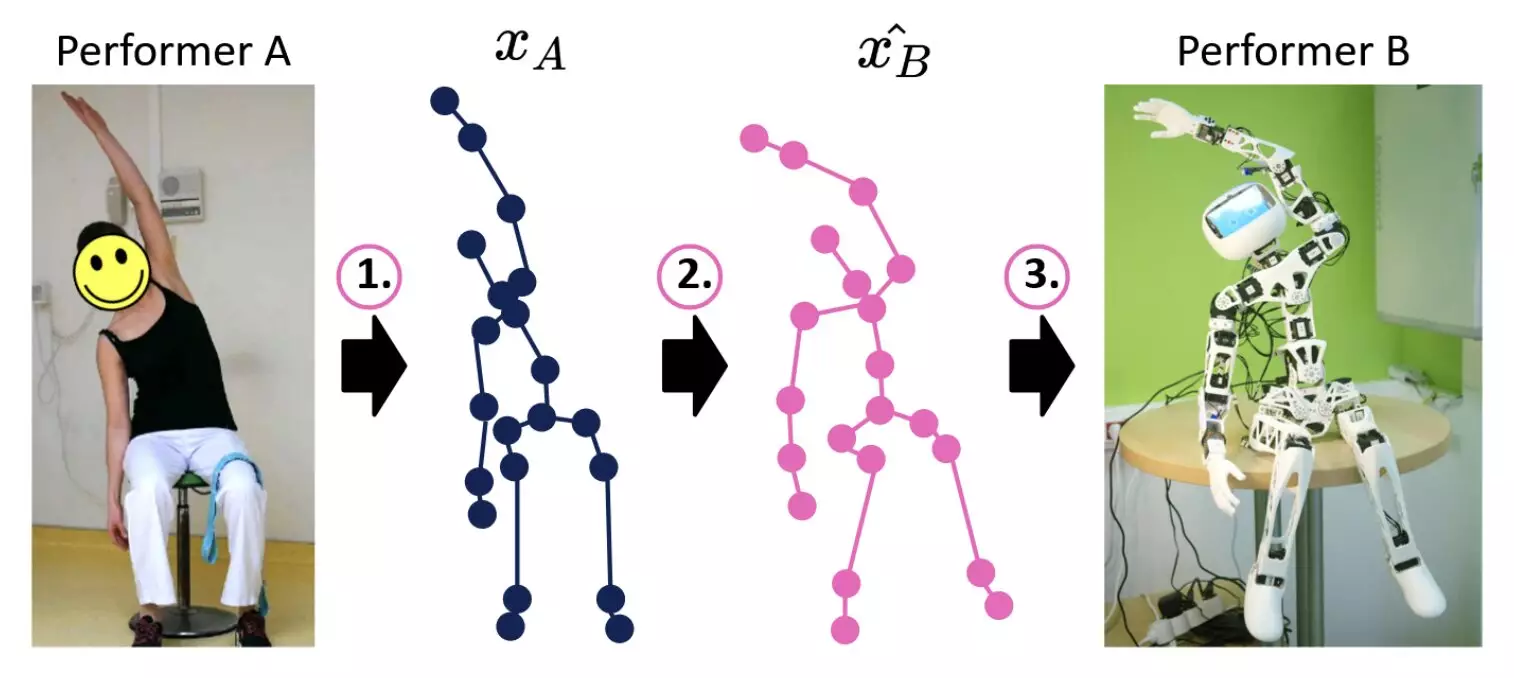

Researchers at U2IS, ENSTA Paris recently introduced a new deep learning-based model aimed at improving the motion imitation capabilities of humanoid robotic systems. The model, detailed in a preprint posted on arXiv, approaches motion imitation in three distinct steps to mitigate the human-robot correspondence issues that have hampered previous attempts. It uses deep learning to translate sequences of joint positions from human motions into motions achievable by a specific robot, with the goal of improving online human-robot imitation.

The model developed by Louis Annabi, Ziqi Ma, and Sao Mai Nguyen breaks the human-robot imitation process into three key steps: pose estimation, motion retargeting, and robot control. First, pose estimation algorithms predict sequences of skeleton-joint positions representing human movements. These predicted positions are then translated into joint positions that the robot’s body can physically achieve. Finally, the translated sequences are used to plan the robot’s motions, with the aim of enabling dynamic movements that help it complete tasks.
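The three steps above can be sketched as a simple pipeline. This is only an illustration of how the stages connect, not the researchers' model: the pose estimator is a pass-through stub, the retargeting step uses a hand-written geometric rule rather than a learned network, and every name here (`estimate_pose`, `retarget`, `control_step`, `imitate`, the `shoulder_pitch` joint) is hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Skeleton = Dict[str, Vec3]      # human joint name -> 3D position
RobotPose = Dict[str, float]    # robot joint name -> angle in radians
Limits = Dict[str, Tuple[float, float]]


def estimate_pose(frame: Skeleton) -> Skeleton:
    """Step 1 (stub): a real system would infer the skeleton from sensor data."""
    return dict(frame)


def retarget(skeleton: Skeleton, limits: Limits) -> RobotPose:
    """Step 2 (assumed rule): map the human pose to an angle the robot can reach."""
    sx, _, sz = skeleton["shoulder"]
    wx, _, wz = skeleton["wrist"]
    # Direction of the arm in the x-z plane, used as a shoulder pitch target.
    pitch = math.atan2(wz - sz, wx - sx)
    lower, upper = limits["shoulder_pitch"]
    return {"shoulder_pitch": min(max(pitch, lower), upper)}


def control_step(current: RobotPose, target: RobotPose, max_step: float) -> RobotPose:
    """Step 3 (assumed rule): move toward the target, limited to max_step per tick."""
    command = {}
    for name, goal in target.items():
        start = current.get(name, 0.0)
        delta = min(max(goal - start, -max_step), max_step)
        command[name] = start + delta
    return command


def imitate(frames: List[Skeleton], limits: Limits, max_step: float = 0.1) -> List[RobotPose]:
    """Run the three steps on each incoming frame and record the commands."""
    pose: RobotPose = {"shoulder_pitch": 0.0}
    trajectory = []
    for frame in frames:
        skeleton = estimate_pose(frame)
        target = retarget(skeleton, limits)
        pose = control_step(pose, target, max_step)
        trajectory.append(pose)
    return trajectory
```

Keeping the stages as separate functions means the retargeting rule could be swapped for a learned model without touching pose estimation or control, which mirrors the separation the article describes.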

In preliminary tests, Annabi, Ma, and Nguyen compared their deep learning-based model to a simpler method for reproducing joint orientations that does not use deep learning. The results fell short of their expectations, suggesting that current deep learning methods may struggle with real-time motion retargeting. The researchers acknowledge that further experiments are needed to identify and address potential issues with their approach and improve the model’s effectiveness.
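The article does not describe the simpler baseline in detail. As a rough illustration of what reproducing a joint orientation without deep learning can look like, the sketch below computes the angle at a joint from three skeleton positions and clamps it to a robot's joint limits. The function names and the clamping step are assumptions made for this example, not the researchers' method.

```python
import math
from typing import Sequence


def joint_angle(parent: Sequence[float], joint: Sequence[float], child: Sequence[float]) -> float:
    """Angle in radians at `joint` between the segments toward parent and child."""
    u = [p - j for p, j in zip(parent, joint)]
    v = [c - j for c, j in zip(child, joint)]
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(a * a for a in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("degenerate segment: two joints share the same position")
    cos_theta = sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)
    # Guard against rounding pushing the cosine slightly outside [-1, 1].
    return math.acos(min(1.0, max(-1.0, cos_theta)))


def clamp_to_limits(angle: float, lower: float, upper: float) -> float:
    """Restrict an angle to the range the robot joint can physically reach."""
    return min(max(angle, lower), upper)


# Example: human elbow angle from shoulder, elbow, and wrist positions,
# limited to a hypothetical robot elbow range of 0 to 2 radians.
elbow = joint_angle((0.0, 0.0, 1.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
robot_elbow = clamp_to_limits(elbow, 0.0, 2.0)
```

A direct geometric mapping like this needs no training data, which is one reason it makes a natural point of comparison for a learned retargeting model.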

The Road Ahead: Challenges and Opportunities

While unsupervised deep learning techniques show promise for enabling imitation learning in robots, the researchers acknowledge that there is still room for improvement. Future work will focus on three primary areas: investigating the shortcomings of the current method, creating a dataset of paired motion data for improved training, and refining the model architecture to improve retargeting accuracy. Through this work, the researchers aim to overcome the limitations of current deep learning methods and make human-robot imitation learning viable for practical applications.
