Nvidia CEO Jensen Huang recently revealed that the company’s next-generation graphics processor for artificial intelligence, known as Blackwell, will cost between $30,000 and $40,000 per unit. The chip is expected to have a major impact on the AI industry, but its high price has raised some eyebrows.

According to Huang, Nvidia had to develop new technology to make Blackwell possible, at an estimated research and development cost of around $10 billion. The investment shows how hard the company is pushing the boundaries of AI hardware, but it also raises questions about who will be able to afford the result.

At $30,000 to $40,000 per unit, Blackwell sits in a similar price bracket to its predecessor, the H100 from the “Hopper” generation. Analyst estimates suggest that demand for these high-performance AI chips has remained strong, even though Hopper itself represented a significant price increase over the generation before it.

The introduction of the Hopper generation in 2022 marked a turning point for Nvidia’s AI chip business, driving a surge in quarterly sales and drawing in major players in the AI industry. Companies such as Meta have invested heavily in Nvidia’s AI GPUs to train their AI models, underlining how central these chips have become to the development of cutting-edge AI applications.

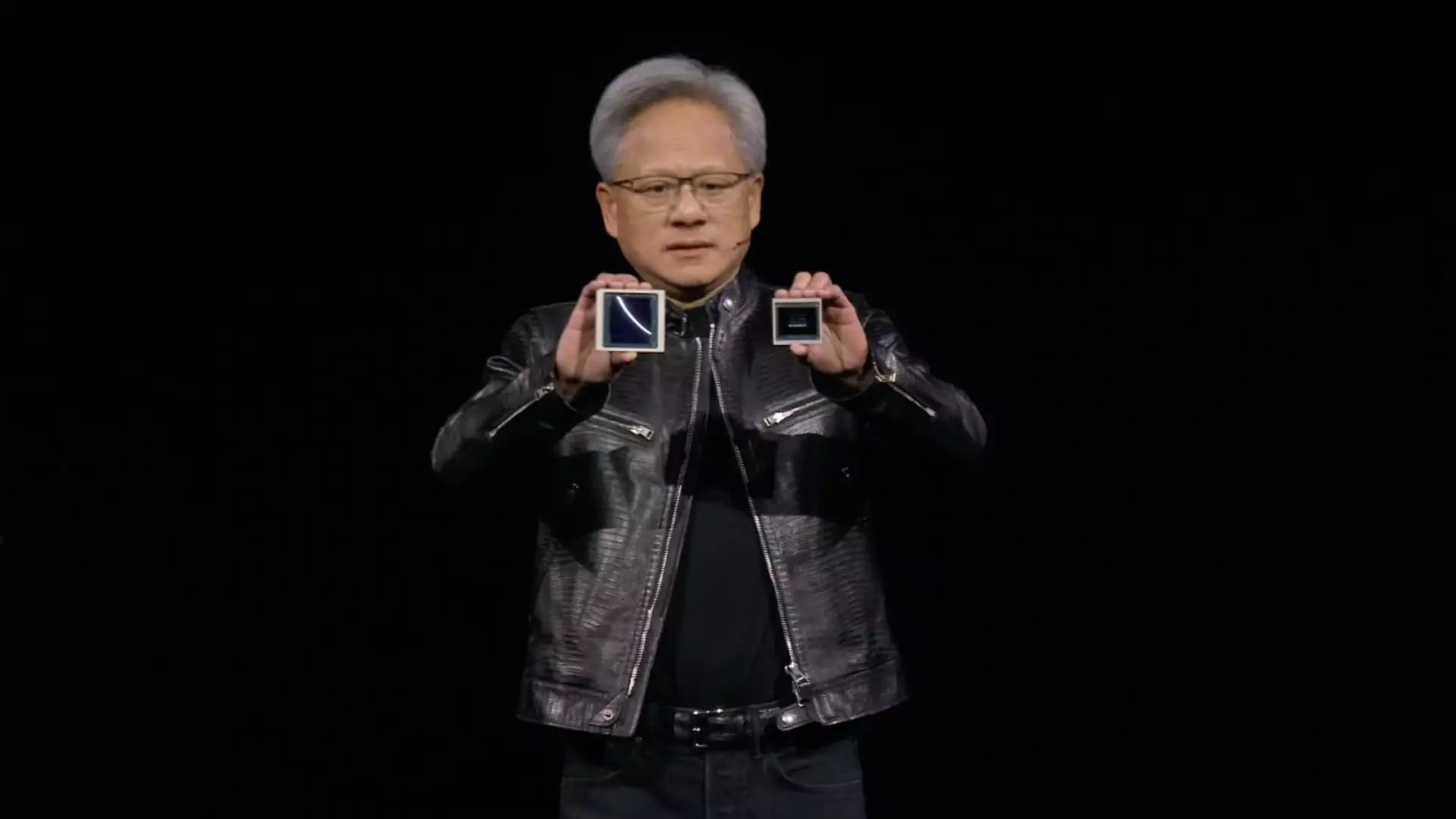

Blackwell promises significant gains in performance and energy efficiency. It combines two chips in a physically larger form factor than its predecessors. The design is expected to fuel further advances in AI and strengthen Nvidia’s position as a leader in the AI hardware market.

Nvidia does not disclose list prices for its chips, and the actual cost to customers such as Meta or Microsoft can vary depending on factors such as volume discounts and whether the chips are bought through third-party vendors. Many AI servers are also built around multiple GPUs, reflecting the growing demand for high-performance computing in the AI sector.

Nvidia has announced several versions of the Blackwell AI accelerator, including the B100, B200, and GB200 models. The chips are expected to become available later this year and to substantially raise the capabilities of AI hardware.

While the cost of Nvidia’s Blackwell chip may raise concerns about accessibility and affordability, its technological advances and market impact are hard to overlook. As demand for high-performance AI hardware continues to grow, Nvidia remains at the forefront of innovation in the AI industry.