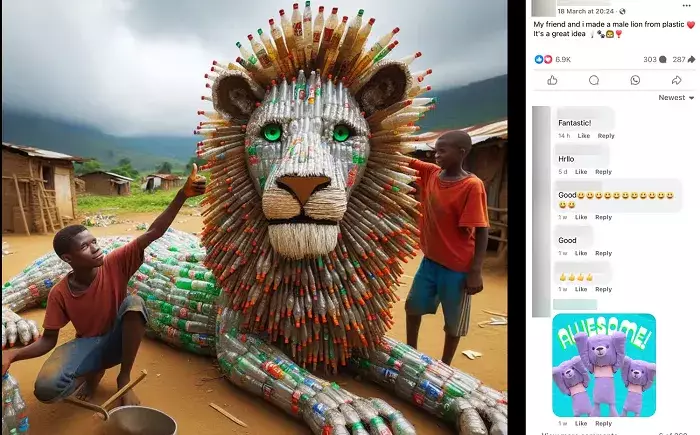

As the use of generative AI continues to grow, companies like Meta face a new challenge: synthetic content flooding their platforms. AI-generated posts have been gaining significant engagement on social media platforms like Facebook, despite obvious signs that the content is not real. While some users can tell that such content is synthetic, not everyone has the digital literacy to do so. As a result, Meta has recognized the need to update its AI labeling policies so that more AI-generated content is properly tagged and disclosed to users.

Meta’s existing approach to labeling AI content was deemed too narrow, as it focused primarily on videos altered by AI to make a person appear to say something they did not say. The company acknowledged that the landscape of AI-generated content has evolved rapidly, with realistic AI-generated photos and audio becoming more prevalent. Meta has therefore decided to broaden its labeling policies to cover a wider range of synthetic content. New “Made with AI” labels are being added to content that exhibits industry-standard AI indicators, or when users disclose that the content is AI-generated.

The new labels serve a dual purpose: informing users about the authenticity of the content and educating them about the capabilities of AI technology. By clearly labeling AI-generated content, Meta aims to increase users’ awareness that such technology exists and can be misused. The labels will also help users make more informed decisions about the content they consume and share, and provide context if they come across similar content elsewhere.

While the updated labeling policies are a step towards greater transparency around AI content, Meta may face challenges in implementing them effectively. As AI technology continues to advance, detecting generative AI content within posts may become more difficult, limiting Meta’s automated capacity to flag it. However, the new approach also empowers Meta’s moderators to enforce the labeling policies more effectively, contributing to a safer online environment for users.

Meta’s decision to update its AI content labeling policies is a positive step towards greater transparency and accountability on its platforms. By giving users clear information about whether content is AI-generated, Meta is helping to raise awareness of the capabilities and risks of synthetic media. While implementing these policies may prove challenging, the overall impact is likely to be beneficial, promoting more informed and responsible use of AI technology.
